Matt Strassler [August 27 – September 9, 2013]

What is “Naturalness”?

[This subject is closely related to the hierarchy problem.]

What do particle physicists and string theorists mean when they refer to a particular array of particles and forces as “natural”? They don’t mean “part of nature”. Everything in the universe is part of nature, by definition.

The word “natural” has multiple meanings. The one that scientists are using in this context isn’t “having to do with nature” but rather “typical” or “generic” — “just what you’d have expected”, or “the usual” — as in, “naturally the baby started screaming when she bumped her head”, or “naturally it costs more to live near the city center”, or “I hadn’t worn those glasses in months, so naturally they were dusty.” And unnatural is when the baby doesn’t scream, when the city center is cheap, and when the glasses are pristine. Usually, when something unnatural happens, there’s a good reason.

In most contexts in particle physics and related subjects, surprises — big surprises, anyway — are pretty rare. That means that if you look at a physical system, it usually behaves more or less along lines that, with some experience as a scientist, you’d naturally expect. If it doesn’t, then (experience shows) there’s generally a really good reason… and if that reason isn’t obvious, the unnatural behavior of the system may be pointing you to something profound that you don’t yet know.

For our purposes here, the reason the notion of naturalness is so important is that there are two big surprises in nature that we particle physicists and our friends have to confront. The first is that the cosmological constant [often referred to as “dark energy” in public settings] is amazingly small, compared to what you’d naturally expect. The second is that the hierarchy between the strength of gravity and the strengths of the other forces is amazingly big, compared to what you’d expect.

The second one can be restated as follows: the Standard Model (combined with Einstein’s theory of gravity) — the set of equations we use to predict the behavior of all the known elementary particles and all the known forces — is a profoundly, enormously, spectacularly unnatural theory. There’s only one aspect of physics — perhaps only one aspect in all of science — that is more unnatural than the Standard Model, and that’s the cosmological constant.

The Notion of “Natural” and “Unnatural”

I think the concept of naturalness is best illuminated by a bit of story-telling.

A couple of friends of mine from college (I’ll call them Ann and Steve) got married, and now have two teenage children. Back when their kids were younger — say, 4 and 7 years old — they were pretty wild. They often played rough, got mad at each other, threw things, and generally needed a lot of supervision.

One day, Ann bought some beautiful flowers and put them in her favorite glass vase. But before she put the vase on the kitchen table, the doorbell rang. She ran to the front, carrying the vase, and as she made her way to the door, she absent-mindedly put the vase down on the small, rickety table that sits by the wall of the kids’ play room.

Half an hour later, Steve returned home with the kids, and sent them into the play room to occupy themselves while he and Ann settled in from the day and prepared dinner. They heard the usual sounds: bumps and crashes, the sounds of bouncing balls and falling blocks, yells of “no fair” and “ow! stop that!”, a moment of screaming that blissfully stopped almost as soon as it started…

It was forty-five minutes later when Ann noticed the vase with the flowers wasn’t on the kitchen table. After a moment searching the kitchen and dining room, she suddenly realized that she’d put it down and forgotten it in the most dangerous place in the house.

So she went running into the play room, hoping she wasn’t too late. And what do you think she found when she opened the door?

Guess. You get three options (Figure 1). Choose the most plausible.

- The vase was exactly where she’d left it, comfortably placed at the center of the table.

- The vase was smashed, and the flowers crushed, down on the floor.

- The vase was hanging off the table, right at the edge, within a millimeter of disaster.

Well, the answer is #3. There it was, just hanging there.

Somehow I suspect you don’t believe me. Or at least, if you do believe me, you probably are assuming there must be some complicated explanation that I’m about to give you as to how this happened. It can’t possibly be that two young kids were playing wildly in the room and somehow managed to get the vase into this extremely precarious position just by accident, can it? For the vase to end up just so — not firmly on the table, not falling off the table, but just in between — that’s … that’s not natural!

There must (mustn’t there?) be an explanation.

Maybe there was glue on the side of the table and the vase stuck to it before falling off? Maybe one of the kids was hiding behind the table and holding the vase there as a practical joke on his mom? Maybe her husband had somehow tied a string around the vase and attached it to the table, or to the ceiling, so that the vase couldn’t fall off? Maybe the table and vase are both magnetized somehow…?

Something so unnatural as that can’t just end up that way on its own… especially not in a room with two young children playing rough and throwing things around.

The Unnatural Nature of the Standard Model

Well. Now let’s turn to the Standard Model, combined with Einstein’s theory of gravity.

I want you to imagine a universe much like our own, described by a complete set of equations — a “theory”, in theoretical-physics speak — much like the Standard Model (plus gravity). To keep things simple, let’s say this universe even has all the same elementary particles and forces as our own. The only difference is that the strengths of the forces, and the strengths with which the Higgs field interacts with other known particles and with itself (which in the end determines how much mass the known particles have) are a little bit different, say by 1%, or 5%, or maybe even up to 50%. In fact, let’s imagine ALL such universes… all universes described by Standard Model-like equations in which the strengths with which all the fields and particles interact with each other are changed by up to 50%. What will the worlds described by these slightly different equations (shown in a nice big pile in Figure 2) be like?

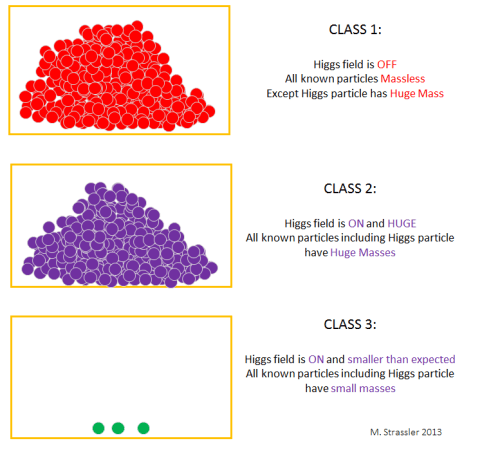

Among those imaginary worlds, we will find three general classes, with the following properties.

- In one class, the Higgs field’s average value will be zero; in other words, the Higgs field is OFF. In these worlds, the Higgs particle will have a mass as much as ten thousand trillion (10,000,000,000,000,000) times larger than it does in our world. All the other known elementary particles will be massless (up to small caveats I’ll explain elsewhere). In particular, the electron will be massless, and there will be no atoms in these worlds.

- In a second class, the Higgs field is FULL ON. The Higgs field’s average value, and the Higgs particle’s mass, and the mass of all known particles, will be as much as ten thousand trillion (10,000,000,000,000,000) times larger than they are in our universe. In such a world, there will again be nothing like the atoms or the large objects we’re used to. For instance, nothing large like a star or planet can form without collapsing and forming a black hole.

- In a third class, the Higgs field is JUST BARELY ON. Its average value is roughly as small as in our world — maybe a few times larger or smaller, but comparable. The masses of the known particles, while somewhat different from what they are in our world, at least won’t be wildly different. And none of the types of particles that have mass in our own world will be massless. In some of those worlds there can even be atoms and planets and other types of structure. In others, there may be exotic things we’re not used to. But at least a few basic features of such worlds will be recognizable to us.

Now: what fraction of these worlds are in class 3? Among all the Standard Model-like theories that we’re considering, what fraction will resemble ours at least a little bit?

The answer? A ridiculously, absurdly tiny fraction of them (Figure 3). If you chose a universe at random from among our set of Standard Model-like worlds, the chance that it would look vaguely like our universe would be spectacularly smaller than the chance that you would put a vase down carelessly on a table and end up putting it right on the edge of disaster, just by accident.

In other words, if (and it’s a big “if”) the Standard Model (plus gravity) describes everything that exists in our world, then among all possible worlds, we live in an extraordinarily unusual one — one that is as unnatural as a vase nudged to within an atom’s breadth of falling off the table. Classes 1 and 2 of universes are natural — generic — typical; most Standard Model-like theories would give universes in one of those classes. Class 3, of which our universe is an example, includes the possible worlds that are extremely non-generic, non-typical, unnatural. That we should live in such an unusual universe — especially since we live, quite naturally, on a rather ordinary planet orbiting a rather ordinary star in a rather ordinary galaxy — is unexpected, shocking, bizarre. And it is deserving, just like the weirdly placed vase, of an explanation. One certainly has to suspect there might be a subtle mechanism, something about the universe that we don’t yet know, that permits our universe to naturally be one that can live on the edge.

And what is the analogy to the playing children who endanger the vase, and make its balanced condition especially implausible? It is quantum mechanics itself — the very basic operating principles of our world. Quantum effects do not coexist well with accidental, unstable balance.

I’ll go on to discuss those quantum effects, and how they make the Standard Model unnatural, in a moment. But first, although I hope you liked my story, I should point out there’s one important difference between the vase on the table and the universe. If somebody bumps the table or the vase, it will probably fall off, or perhaps, if we’re lucky, slide toward the center of the table. In other words, it can easily move away from its precarious position if it is disturbed. Our universe, by contrast, is not in danger currently of smoothly shifting its properties, and becoming a universe in Class 1 or Class 2. [While it is possible that someday it could shift suddenly to become a very different universe, through a process known as tunneling or vacuum decay, this event is likely to be unimaginably far off; this is a subject for another day, but it’s not something to worry about.] The real issue for the universe is in the past: how, among the vast number of possible universes, did we end up in such an apparently unnatural one? Is there something about our universe that we don’t yet know which makes it not as unnatural as it seems? Or perhaps the fact that many (most?) natural universes don’t seem hospitable for life has something to do with it? Or maybe we humans haven’t been clever enough yet, and there is some other subtle scientific explanation? Whatever the reason, either it is due to a timeless fact or due to something that happened very long ago; the universe (or at least the large region we can see with our eyes and telescopes) has been unchangingly unnatural [if the Standard Model fully describes it] for billions of years, and won’t be changing anytime in the near future.

In any case, let’s move on now, to understand the quantum physics that makes a universe described by the Standard Model (and gravity) so incredibly unusual.

Quantum Physics and (un)Naturalness

At this point, please read about quantum fluctuations of quantum fields, and the energy carried in those fluctuations, if you haven’t already done so. Along the way you’ll find out a little about another naturalness problem: the cosmological constant. After you’ve read that article, you can continue with this one.

Back to the Higgs (and Other Similar Particles)

Quantum fluctuations of fields, and their contribution to the energy density of empty space (the so-called “vacuum energy”) play a big part in our story. But our goal here requires we set the cosmological constant problem aside, and focus on the Higgs particle and on why the Standard Model is unnatural. This is not because the cosmological constant problem isn’t important, and not because we’re totally certain the two problems are completely unrelated. But since the cosmological constant has everything to do with gravity, while the problem of the Higgs particle and the naturalness of the Standard Model doesn’t have anything to do with gravity directly, it’s quite possible they’re solved in different ways. And each of the two problems is enormous on its own; if in fact we need to solve them simultaneously, then the situation just gets worse. So let’s just send the cosmological constant to a far corner to take a little nap. We do need to remember that it’s the elephant in the room that we can’t forever ignore.

Ok — about the Higgs field. There are three really important questions about the Higgs field and particle that we want to answer. [I’ll phrase all these questions assuming the Standard Model is right, or close to right, but if it isn’t, don’t worry: the ideas I’ll explore remain essentially the same, even though slightly different phrasing is required.]

- The Higgs field is “ON” — its average value, everywhere and at all times, at least since the very early universe, isn’t zero. Why is it on?

- Its average value is 246 GeV. What sets its value?

- The Higgs particle has a mass of about 125 GeV/c². What sets this mass?

I’m going to explain to you how and why these questions are related to the issue of how the energy of empty space (part of which comes from quantum fluctuations of fields) depends on the Higgs field’s average value.

The Higgs Field’s Value and the Energy of Empty Space

For any field — not just the Higgs field — how is it determined what the average value of the field is in our universe? Answer: a field’s average value must have the following property: if you change the value by a little bit, larger or smaller, then the energy in empty space must increase. In short, the field must have a value for which the energy of empty space is at a minimum — not necessarily the minimum, but a minimum. (If there is more than one minimum, then which one is selected may depend on the history of the universe, or on other more subtle considerations I won’t go into now.)
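To make the “a minimum, not necessarily the minimum” point concrete, here is a small Python sketch. The curve `toy_energy` is invented purely for illustration (a tilted quartic chosen only because it has two minima of different depth); it is not the real vacuum energy of the Standard Model, and the function names and the grid search are my own choices.

```python
def toy_energy(phi):
    """Hypothetical energy of empty space versus a field value phi.

    Illustrative only: (phi-1)^2 (phi-3)^2 has two equal minima,
    and the small tilt 0.5*phi makes one of them deeper than the other.
    """
    return (phi - 1.0) ** 2 * (phi - 3.0) ** 2 + 0.5 * phi


def local_minima(energy, lo, hi, steps=4000):
    """Return grid points whose energy is lower than both neighbours.

    Each such point is 'a minimum': nudging the field either way
    raises the energy of empty space.
    """
    xs = [lo + (hi - lo) * i / steps for i in range(steps + 1)]
    es = [energy(x) for x in xs]
    return [
        xs[i]
        for i in range(1, steps)
        if es[i] < es[i - 1] and es[i] < es[i + 1]
    ]


minima = local_minima(toy_energy, 0.0, 4.0)
for phi in minima:
    print(f"minimum near phi = {phi:.3f}, energy = {toy_energy(phi):.3f}")
```

Both values printed are allowed resting places for the field in this toy world, even though only one of them has the lowest energy overall. Which one a universe actually sits in is a separate question, as noted above.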

A couple of illustrative examples of how the energy of empty space in our universe, or in some imaginary universe, might depend on the Higgs field, or on some other similar field, are shown in Figure 4. In each of the two cases I’ve drawn, there happen to be two minima where the Higgs field could sit — but that’s just chance. In other cases there could be several minima, or just one. The fact that the Higgs field is ON in our world implies there’s a minimum in the universe’s vacuum energy when the Higgs field has a value of 246 GeV. While it’s not obvious from what I’ve said so far, we are confident, from what we know about nature and about our equations, that there is no minimum when the Higgs field is zero, and that’s why our universe’s Higgs field isn’t OFF. So in our universe, the dependence of the vacuum energy on the Higgs field probably looks more like the left-hand figure than the right-hand one, but, as we’ll see, it may not look much like either of them. If the Standard Model describes physics at energies much above and at distances much shorter than the ones we’re studying now at the Large Hadron Collider (LHC), then the form of the corresponding curve is much more peculiar — as we’ll see later.

The Higgs Particle’s Mass and the Energy of Empty Space

What about the Higgs particle’s mass? It is determined (Figure 4) by how quickly the energy of empty space changes as you vary the Higgs field’s value away from where it prefers to be. Why?

A Higgs particle is a little ripple in the Higgs field — i.e., as a Higgs particle passes by, the Higgs field has to change a little bit, becoming in turn a bit larger and smaller. Well, since we know the Higgs field’s average value sits at a minimum of the energy of empty space, any small change in that value slightly increases that overall energy. This extra bit of energy is [actually half of] what gives the Higgs particle its mass-energy (i.e., its E=mc² energy). If the shape of the curve is very flat near the minimum (see Figure 4), the energy required to make a Higgs particle is rather small, because the extra energy in the rippling Higgs field (i.e., in the Higgs particle) is small. But if the shape of the curve is very sharp near the minimum, then the Higgs particle has a big mass.

Thus it is the flatness or sharpness in the curve in the plot, at the point where the Higgs field’s value is sitting — the “curvature at the minimum” — that determines the Higgs particle’s mass.
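For readers who like symbols, the statement can be sketched as follows. The notation is mine and does not appear in the figures: V is the energy of empty space as a function of the field, v is the field’s average value, δ is a small ripple away from it, and I assume units in which ħ = c = 1 and a conventionally normalized field.

$$
V'(v) = 0, \qquad V(v+\delta) \approx V(v) + \tfrac{1}{2}\,V''(v)\,\delta^{2}, \qquad m_h^{2} = V''(v).
$$

A flat curve means a small V''(v) and a light particle; a sharp curve means a large V''(v) and a heavy one.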

Why It Isn’t Easy to Have The Higgs Particle’s Mass Be Small

The Higgs particle’s mass is measured to be about 125-126 GeV/c², about 134 times the proton’s mass. Now why can’t we just put that mass into our equations, and be done with this question about where it all comes from?

The problem is that the Higgs field’s value, and the Higgs particle’s mass, aren’t things you put directly into the equations that we use; instead, you extract them, by a complex calculation, from the equations we use. And here we run into some difficulty…

We get these two quantities — the average value and the mass of the field and particle — by looking at how the energy of empty space depends on the Higgs field. And that energy, as in any quantum field theory like the Standard Model, is a sum of many different things:

- energy from the fluctuations of the Higgs field itself

- energy from the fluctuations of the top quark field

- energy from the fluctuations of the W field

- energy from the fluctuations of the Z field

- energy from the fluctuations of the bottom quark field

- energy from the fluctuations of the tau lepton field

- …

and so on for all the fields of nature that interact directly with the Higgs field… I’ve indicated these — schematically! these are not the actual energies — as blue curves in Figure 5. Each plot indicates one contribution to the energy of empty space, and how it varies as the Higgs field’s average value changes from zero to the maximum value that I dare consider, which I’ve called vmax.

[Note: Some of you may have read that these calculations of the energy of empty space give infinite results. This is true and yet irrelevant; it is a technicality, true only if you assume vmax is infinitely large — which it patently is not. I have found that many people, non-scientists and scientists alike, believe (thanks to books by non-experts and by the previous generations of experts — even Feynman himself), that these infinities are important and relevant to the discussion of naturalness. This is false. We’ll return to this widespread misunderstanding, which involves mistaking mathematical technicalities for physically important effects, at the end of this section.]

What is vmax? It’s as far as one can push up the Higgs field’s value and still believe our calculations within the Standard Model. What I mean by vmax is that if the Higgs field’s value were larger than this (which would make the top quark’s mass larger than about vmax/c²) then the Standard Model would no longer accurately describe everything that happens in particle physics. In other words, vmax is the boundary between where the Standard Model is applicable and where it isn’t.

However, we don’t know what vmax is… and that ignorance is going to play a role in the discussion. From what we know from the LHC, vmax appears to be something like 500 GeV or larger. However, for all we know, vmax could be as much as 10,000,000,000,000,000 times larger than that. We can’t go beyond that point, because that’s the (maximum possible) scale at which gravity becomes important; if vmax were that large, top quarks would be so heavy they’d be tiny black holes! And we know that the Standard Model can’t describe that kind of phenomenon. A quantum-mechanical version of gravity has to be invoked at that point… if not before!

So again, what we know is that vmax is somewhere between 500 GeV and 1,000,000,000,000,000,000 GeV or so. In Figure 5, I’ve assumed it’s quite a bit bigger than 500 GeV; we’ll look in Figure 6 at the case where vmax is close to 500 GeV.

Each one of the contributions in the upper row of Figure 5 is something we can (in principle, and to a large extent in practice) calculate, for any Higgs field value between zero and vmax, and for all quantum fluctuations with energy less than about vmax. [I’m oversimplifying somewhat here; really this energy Emax need not be quite the same as vmax, but let’s not get more complicated than necessary.] If vmax is big, then each one of these contributions is really big — and more importantly, the variation as we change the Higgs field’s value from zero to vmax is big too — something like vmax⁴/(hc)³ … where h is Planck’s quantum constant and c is the universal speed limit, often called “the speed of light”.

But that’s not all. To this we have to add other contributions, shown in the second row of Figure 5, which come from physical phenomena that we don’t yet know anything or much about, physics that does not directly appear in the Standard Model at all. [Technically, we absorb these effects from unknown physics into parameters that define the Standard Model’s equations, as inputs to those equations; but they are inputs, rather than something we calculate, precisely because they’re from unknown sources.] In addition to effects from quantum fluctuations of known fields with even higher energies, there may also be effects from

- the quantum mechanics of gravity,

- heavy particles we’ve not yet discovered,

- forces that are only important at distances far shorter than we can currently measure,

- other more exotic contributions from, say, strings or D-branes in string theory or some other theory like it,

- etc.,

some of which may depend, directly or indirectly, on the Higgs field’s value. I’ve drawn these unknown effects in red; note that these curves are pure guesswork. We don’t know anything about these effects except that they could exist (and the gravity effects definitely exist), and that some or all of them could be really big… as big as or bigger than the ones we know about in the upper row. In principle, all these unknown effects could be zero — but that wouldn’t resolve the naturalness problem, as we’ll see, so presumably they’re not all zero.

What’s crucial here is that there’s no obvious reason to expect these unknown effects in red are in any way connected with the known contributions in blue. After all, why should quantum gravity effects, or some new force that has nothing to do with the weak nuclear force, have anything to do with the energy density of quantum fluctuations of the top quark field or of the W field? These seem like conceptually separate sources of the energy density of empty space.

And here’s the puzzle. When we add up all of these contributions to the energy of empty space [Unsure how to add curves like these together? Click here for an explanation…] — each of which is big and many of which vary a lot as the Higgs field’s value changes from zero to the maximum that we can consider — we find an incredibly flat curve, the one shown in green. It’s almost perfectly flat near the vertical axis. And yet, its minimum is not quite at zero Higgs field; it’s slightly away from zero, at a Higgs field value of 246 GeV. All of those different contributions in blue and red, which curve up and down in varying degrees, have almost (but not quite) perfectly canceled each other when added together. It’s as though you piled a few mountains from Montana into a deep valley in California and ended up with a plain as flat as Kansas. How did that happen?

Well, how bad is this problem? How surprising is this cancellation? The answer is that it depends on vmax. If vmax is only 500 GeV, then there’s no real cancellation needed at all — see Figure 6. But if vmax is huge, the cancellation is incredibly precise, as in Figure 5. The larger is vmax, the more remarkable it is that all the contributions cancelled.

How remarkable? The cancellation has to be perfect to something like one part in (vmax/500 GeV)², give or take a few. So if vmax is close to 500 GeV, that’s no big deal; but if vmax = 5000 GeV, we need a cancellation to one part in 100. If it’s 500,000 GeV, we need cancellation to one part in a million.

And if we take vmax as high as possible — if the Standard Model describes all non-gravitational particle physics — then we need cancellation of all these different effects to one part in about 1,000,000,000,000,000,000,000,000,000,000.
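The arithmetic behind these numbers is easy to reproduce. The short Python sketch below just evaluates the rough estimate quoted above, one part in (vmax/500 GeV)²; the function name and the choice of 10¹⁸ GeV as a stand-in for “as high as possible” are my own assumptions, and since the estimate is only good to “give or take a few”, the last row should be read as an order of magnitude.

```python
def cancellation_precision(vmax_gev, v_ref_gev=500.0):
    """Rough estimate: the cancellation must hold to one part in N,
    with N = (vmax / 500 GeV)**2. Order-of-magnitude only."""
    return (vmax_gev / v_ref_gev) ** 2


# 1e18 GeV is used here as an illustrative upper end for vmax.
for vmax in (500.0, 5_000.0, 500_000.0, 1e18):
    n = cancellation_precision(vmax)
    print(f"vmax = {vmax:.0e} GeV  ->  one part in {n:.0e}")
```

The point to notice is the square: every factor of ten in vmax costs a factor of one hundred in how precisely the contributions must cancel.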

In the last case, the incredible delicacy of the cancellation is particularly disturbing. It means that if you could alter the W particle’s mass, or the strength of the electromagnetic force, by a tiny amount — say, one part in a million million — the cancellation would completely fail, and you’d find the theory would be in Class 1 or Class 2, with an ultra-heavy Higgs particle and either a large or absent Higgs field value (see Figure 3). This incredible sensitivity means that the properties of our world have to be, very precisely, just so — like a radio that is set exactly to the frequency of a desired radio station, finely tuned. Such extreme “fine-tuning” of the properties of a physical system has no precedent in science.

To say this another way: what’s unnatural about the Standard Model — specifically, about the Standard Model being valid up to the scale vmax, if vmax is much larger than 500 GeV or so — is the cancellation shown in Figure 5. It’s not generic or typical… and the larger is vmax, the more unnatural it is. If you take a bunch of generic curves like those in Figure 5, each of which has minima and maxima at Higgs field values that are either at zero or somewhere around vmax, and you add those curves together, you will find that the sum of those curves is a curve that also has its minima and maxima at

- a substantial fraction of vmax [Class 2 theories — see Figure 3],

- or at zero [Class 1 theories],

- but not somewhere non-zero that is much much smaller than vmax [Class 3 theories].

Moreover, if the curves are substantially curved near their minima and maxima, their sum will also typically have substantial curvature near its minima and maxima [i.e. the Higgs particle’s mass will be roughly vmax/c², as in Class 1 and Class 2 theories], and won’t be extremely flat near any of its minima [needed for the Higgs particle to be much lighter than vmax/c², as occurs in Class 3 theories]. This is illustrated, for the addition of just two curves, in Figure 7, where we see the two curves have to have a very special relationship if their sum is to end up very flat.
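Here is a toy numerical version of this statement, in Python. Everything in it is a simplifying assumption of mine, not a Standard Model calculation: I work in units where vmax = 1, I take the total energy to be E(h) = A·h² + ½·h⁴, and I let A be the sum of a few unrelated contributions, each drawn at random between -1 and 1. The minimum then sits at h = 0 when A is positive (Class 1) and at h = √(-A) when A is negative, and I call a world Class 3 only if that value is below a threshold `small`.

```python
import random


def classify(contributions, small=0.1):
    """Classify a toy world (units where vmax = 1).

    Toy energy: E(h) = A*h**2 + 0.5*h**4, where A is the sum of the
    unrelated contributions. This is an illustrative model only.
    """
    A = sum(contributions)
    if A >= 0:
        return 1              # only minimum is at h = 0: field OFF
    v = (-A) ** 0.5           # dE/dh = 2*A*h + 2*h**3 = 0  ->  h**2 = -A
    return 3 if v < small else 2


def class_fractions(n_worlds=200_000, n_terms=2, small=0.1, seed=0):
    """Fraction of randomly generated toy worlds falling in each class."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    counts = {1: 0, 2: 0, 3: 0}
    for _ in range(n_worlds):
        terms = [rng.uniform(-1.0, 1.0) for _ in range(n_terms)]
        counts[classify(terms, small)] += 1
    return {k: c / n_worlds for k, c in counts.items()}


fractions = class_fractions()
print(fractions)
```

Even with the generous threshold of one tenth of vmax, only about half a percent of these toy worlds land in Class 3, because the contributions have to cancel to within `small`² for the field value to come out that low. The Class 3 fraction shrinks roughly like `small`², so lowering the threshold toward anything like the real ratio of 246 GeV to a very large vmax makes it absurdly tiny.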

That’s the naturalness problem. It’s not just that the green curve in Figure 5 is remarkably flat, with a minimum at a small Higgs field value. It’s that this curve is an output, a sum of many large and apparently unrelated contributions, and it’s not at all obvious how the sum of all those curves comes out to have such an unusual shape.

An Aside About Infinities, Renormalization, and Cut-offs

[You can skip this little section if you want; you won’t need to understand it to follow the rest of the article.]

Now, about those infinities that you may have read about — along with the scary-sounding word “renormalization”, in which infinities seem to be somehow swept under the rug, leading to finite predictions. These infinities, and their removal via renormalization, sometimes lead people — even scientists — to claim that particle physicists don’t know what they are doing, and that this causes them to see a naturalness problem where none exists.

Such claims are badly misguided. These technical issues (which are well understood nowadays, in any case) are completely irrelevant in the present context.

The infinities that arise in certain calculations of the Higgs particle’s mass, and of the Higgs field’s value, are a symptom of the naturalness problem, a mathematical symptom that shows up if you insist on taking vmax to infinity, which, though often convenient, is an unphysical thing to do. The infinities are not the naturalness problem, nor are they at its heart, nor are they its cause.

Among many ways to see this, one very easy way is to study the wide variety of finite quantum field theories discovered in the 1980s (a list of references can be found in an old paper of mine with Rob Leigh [now a professor at the University of Illinois].) These theories have minimal amounts of supersymmetry, as well as being finite. If you take such a theory (see Figure 8), and you ruin the supersymmetry at a scale vmax, while assuring the theory that remains at lower energies still has spin-zero fields like the Higgs field, you do not introduce any infinities. Moreover, there is no need to artificially cut the theory off at energies below vmax (as I have done in Figure 5, separating known from unknown) since in this example we know the equations to use at energies above as well as below vmax. The energy of empty space, and its dependence on the various fields, can be calculated without any ambiguity, infinities, or infinite renormalization. So — is there a naturalness problem here too? Do the spin-zero particles generically get masses as big as vmax/c²? Do the spin-zero fields have values that are either zero or roughly as big as vmax? You Bet! No infinities, no sweeping anything under a rug, no artificial-looking cutoffs — and a naturalness problem that’s just as bad as ever.

By the way, there’s an interesting loophole to this argument, using a lesson learned from string theory about quantum field theory. But though it gives examples of theories that evade the naturalness problem, neither I nor anyone else has been able (so far) to use it to really solve the naturalness problem of the Standard Model in a concrete way. Perhaps the best attempt was this one.

We could also repeat this type of calculation within string theory (a technical exercise, which does not require we assume string theory really describes nature). String theory calculations have no infinities. But if vmax, the energy scale where the Standard Model fails to work, is much larger than 500 GeV, the naturalness problem is just as bad as before.

In short: getting rid of the infinities that arise in certain Higgs-related calculations does NOT by itself solve or affect the naturalness problem.

Solutions to the Naturalness Problem

On purely logical grounds, a couple of qualitatively different types of solutions to this problem come to mind. [To be continued…]

208 Responses

Referring to Figure 3, what if a universe in Class 3 outperforms the other two classes in some way, such as lasting longer, reaching a bigger size faster, having fewer catastrophes, or whatever. This would be Fine Tuning in the only way that makes sense — a Darwinian selection.

You could also get the cosmological constant out of this. There is only one universe, and it is discrete at the Planck scale. The Planck-size cells that comprise space are the reproducing and evolving “entities”. What appears to be a cosmological constant is simply their rate of growth.

It could be selection, but it is not “Darwinian” (not a population of replicators that diverge from common descent).

Humans have ‘naturally’ thought they lived on one flat chunk of map for years, then one unique round ball, then one unique solar system, one unique galaxy, one unique universe. What’s the difficulty with questioning the notion of our uniqueness?

Unlikely is not unnatural, simply unlikely. Invoking the word ‘naturalness’ is like me invoking ‘the gods’ when my coin flip lands on its rim (has happened once, was fun).

I’m happy to find your blog: As an Asperger (visual thinker) I find myself trying to explain to “word-people” that the brain has the capability of processing information in various languages and that individuals can favor using one or more. I became a geologist because I’m almost entirely a visual processor, despite having verbal skills. Math? Yikes! It’s a language I wish I understood, but abstract thinking is difficult. Your posts do an excellent job of explaining distinctions between terms and concepts which I can grasp as structure and patterns. I posted a link to your blog for Aspergers like me. Thanks! http://aspergerhuman.wordpress.com

This is great stuff, thanks. I’m a high school teacher and convinced that I have to work this into my curriculum.

Thanks for this nice article.

There is something that still eludes me. Hope you may help.

I did not quite get how we know that all the contributions, as in Figure 5, add up to the green curve (bottom right).

Here you say “What We Observe About the Higgs Field and Particle.”

I imagine this is not the result of a calculation within the SM; otherwise, why would we need to include SUSY?

Is this a fact we know from measurements? Have we observed the energy density, or the Higgs’ mass, to be flat regardless of the momentum cut-off?

I read in Wikipedia that “As of November 2006, the Standard Model doesn’t work”. This could explain why we have these problems. Personally, I don’t believe in quarks: protons and neutrons are made up of electron, positrons, and neutrinos. Other particles such as the pions, decay into positrons, electrons, and photons which subsequently split into electron-positron pairs. This implies that protons ARE made of positrons and electrons and possibly neutrinos.

This would explain how radioactive nuclides can capture atomic electrons, emit beta minus and beta plus – these particles are already in the nuclides (except the captured electron which has a home in the nucleus). Shouldn’t the unstable particles and the quarks be removed from the Standard Model table and the whole shebang rewritten? This may solve hierarchy and unnaturalness problems and maybe the cosmological constant problem.

What do you think?

Thanks for this very informative article. I am eagerly awaiting your take on possible solutions to the “natural” problem. There is an interesting paper by Moffat that might be worth a look, given that Natural SUSY may not be found.

http://arxiv.org/abs/hep-th/0610162

Dear Sir,

I am a novice. I have a basic question.

From what I have understood:

Quantum fields were present in some empty space. Then quantum fluctuations occurred and created energy. Then E=mc^2 starts to function. Inflation occurs. And the rest is history.

But how did empty space come into existence in the first place?

And how did quantum fields come into existence in the first place?

Regards.

Thanks for the fine article.

I disagree on one minor point. In mentioning this fine-tuning of the Higgs field or Higgs boson to one part in 1,000,000,000,000,000,000,000,000,000,000 (to use your number), you say, “Such extreme ‘fine-tuning’ of the properties of a physical system has no precedent in science.”

But no, there seem to be at least two other similar cases of equally amazing “fine-tuning”:

(1) Fine-tuning of the cosmological constant (vacuum energy density) to one part in 10 to the 120th power (quantum field theory gives us an expected value 10 to the 120th power higher than the highest value compatible with supernova observations).

(2) Fine-tuning of the proton charge and electron charge, which match to 1 part in 10 to the 20th power, something unexplained by the standard model.

So this Higgs field fine tuning seems to be not at all a unique case in nature.

Regarding (1) — I do discuss this, extensively, elsewhere. But the problem with the cosmological constant is that it too is unexplained. We do not have a proof, as yet, that its small value is due to fine-tuning, so we can’t give it as an example of fine-tuning, only one of possible fine-tuning. Still, I perhaps should have clarified the wording.

Regarding (2) — interesting point, but I don’t think this is fine-tuning either. In many extensions of the Standard Model, anomaly cancellations fix this ratio to be exactly 1. If there were only one generation of fermions, the anomalies would fix the ratio precisely. Charges can’t vary; they don’t get quantum corrections. So if a symmetry or geometrical relationship (like anomaly cancellation) fixes the ratio to be 1, it will be forever 1, no matter what happens. This is not true for the Higgs particle’s mass, which depends, for instance, on the values of all scalar fields in the universe and on every parameter in the field theory.
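The anomaly-cancellation remark can be checked by hand or with a few lines of code. The following is a minimal sketch, assuming the standard hypercharge assignments for one generation (with the convention that electric charge is T3 + Y). It only verifies that the known charges satisfy the cancellation conditions and that the proton and electron charges then sum to exactly zero; it does not derive the assignments from scratch.

```python
from fractions import Fraction as F

# One generation of Standard Model fermions, written as left-handed fields:
# (name, number of colors, SU(2) multiplicity, hypercharge Y).
# Assumed convention: electric charge Q = T3 + Y.
GENERATION = [
    ("quark doublet", 3, 2, F(1, 6)),
    ("anti-up singlet", 3, 1, F(-2, 3)),
    ("anti-down singlet", 3, 1, F(1, 3)),
    ("lepton doublet", 1, 2, F(-1, 2)),
    ("anti-electron singlet", 1, 1, F(1)),
]


def anomaly_sums(fields):
    """Return the four hypercharge sums that must all vanish."""
    cubic = sum(c * w * y**3 for _, c, w, y in fields)      # U(1)^3
    linear = sum(c * w * y for _, c, w, y in fields)        # gravity-gravity-U(1)
    weak = sum(c * y for _, c, w, y in fields if w == 2)    # SU(2)-SU(2)-U(1)
    strong = sum(w * y for _, c, w, y in fields if c == 3)  # SU(3)-SU(3)-U(1)
    return cubic, linear, weak, strong


# Electric charges from Q = T3 + Y for the members of the doublets.
up = F(1, 2) + F(1, 6)
down = F(-1, 2) + F(1, 6)
electron = F(-1, 2) + F(-1, 2)
proton = 2 * up + down

print("anomaly sums:", anomaly_sums(GENERATION))
print("proton charge + electron charge:", proton + electron)
```

Exact fractions are used so that the cancellations are exact rather than approximate: all four sums come out to zero, and so does the proton charge plus the electron charge. Since the charges are fixed by this structure and receive no quantum corrections, nothing has to be tuned.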

“[Note: Some of you may have read that these calculations of the energy of empty space give infinite results. This is true and yet irrelevant; it is a technicality, true only if you assume vmax is infinitely large — which it patently is not. I have found that many people, non-scientists and scientists alike, believe (thanks to books by non-experts and by the previous generations of experts — even Feynman himself), that these infinities are important and relevant to the discussion of naturalness. This is false. We’ll return to this widespread misunderstanding, which involves mistaking mathematical technicalities for physically important effects, at the end of this section.]”

I get the impression that here (and in the section it refers to) you’re going for a slightly different audience than the rest of the piece, namely the grad-student-just-out-of-QFT perspective. If that is what you’re going for, I think the section doesn’t really address the principal source of confusion. You point out that finite theories still have hierarchies, but I think the greater source of confusion is why infinite theories are pathological at all. When students are first introduced to renormalization (especially via stuff from the previous generation of experts) it’s often stated that the infinities are actual infinities, which are then simply included in the bare values of the relevant constants. Essentially, this is the “why not just use dimensional regularization?” confusion, which often makes people new to the subject fail to understand why divergences are a problem in the first place. I don’t know if this would be too far from your primary audience, but you might want to briefly address this particular source of confusion.

Did you still feel that way regarding the second attempt to address this issue at the end of the article?

That’s the section I was referring to, actually. You mostly emphasize that the issue of infinities is a technical one, and that finite theories also have naturalness problems. That shows the issue of infinities and the issue of naturalness are distinct, but if someone started out the section wondering why we can’t just set vmax to infinity/use dim reg, that wouldn’t dissuade them.

I suppose in a way, the counter to that is earlier in the article, in that even if you took infinite vmax seriously you’d just need infinite fine-tuning and thus would be infinitely unnatural. While the structure buries this a little since you dismiss infinite vmax early on, your current structure works better for the majority of readers. So on reflection I don’t think there’s anything you need to add, besides maybe a quick note for those who might take vmax=infinity seriously.

Yes, in the end, if a young expert-in-training doesn’t understand this point even after what I’ve written, he or she is going to have to go through the exercise: take a theory that has even the littlest bit of physics beyond the Standard Model, even just one heavy fermion with a large mass M and a small Higgs boson coupling y; use dim-reg or anything else to get rid of the infinities; and look at how the Higgs mass-squared depends on M. That fermion comes in and blows everything to pieces.
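The size of the effect in that exercise can be estimated in a few lines. This is a schematic sketch, not the full calculation: it assumes the usual one-loop scaling y^2 M^2 / (16 pi^2) for the heavy fermion's contribution to the Higgs mass-squared, and it absorbs the sign, logarithms and exact numerical factors into a hypothetical parameter `order_one`. The Higgs mass of about 125 GeV is used only as a comparison scale.

```python
import math

HIGGS_MASS_GEV = 125.0  # approximate measured Higgs mass, used for comparison


def fermion_shift_gev2(y: float, heavy_mass_gev: float, order_one: float = 1.0) -> float:
    """Schematic size of a heavy fermion's contribution to the Higgs mass-squared.

    Assumes the one-loop scaling y**2 * M**2 / (16 * pi**2). The sign, the
    logarithms and exact factors are hidden in the hypothetical `order_one`.
    """
    return order_one * y**2 * heavy_mass_gev**2 / (16 * math.pi**2)


def shift_over_observed(y: float, heavy_mass_gev: float) -> float:
    """Ratio of the schematic shift to the observed Higgs mass-squared."""
    return fermion_shift_gev2(y, heavy_mass_gev) / HIGGS_MASS_GEV**2


# Even a small coupling does not help once M is large.
for y, mass in ((0.01, 1e4), (0.01, 1e10), (0.001, 1e16)):
    ratio = shift_over_observed(y, mass)
    print(f"y = {y}, M = {mass:.0e} GeV -> shift / (125 GeV)^2 is about {ratio:.1e}")
```

No cutoff and no infinity appears anywhere in this estimate. The dependence on M^2 is what does the damage, which is why removing infinities with dim-reg or any other method leaves the problem untouched.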

Sincere advice : please read what any unbiased mind would reach in the pingback link @ Un-Nauralness or miracle , I do respect the writer very much for saying what many refuse to see…….thanks Dr .D

What is the explanation of the most (un-natural) aspect of space , that is , existing of all quantum fields in a state of complete interconnection occupying same space without resulting in a global universal shortcircuit of all possible interactions simultaneously rendering the universe in a state of ultimate chaos ?

Professor Strassler : In my stand based on many studies and comparisons , the un-naturalness aspect and the hierarchy problems are in reality features of the supreme harmony of our universe where the problemness is in our concepts not in reality.

I assume saying this that you welcome those who agree with you and those who are not convinced , not with the scientific part but with the philosophical part of what you say.

Thanks

Allow me, Matt, to say that the origin of the naturalness problem is naturalism and the physicists’ expectation that the universe should follow their criteria. But the cosmos itself has no problem whatsoever with having a weak scale 16 orders of magnitude below the Planck scale. So follow the universe, not the expectation.

The scales of energies and forces in the universe do not follow our expectations of naturalness but are chosen to satisfy the attributes of a viable universe. So where is the hierarchy problem then?

1- Our universe is impossible to exist without un-naturalness or hierarchy

2-Our existence is not the cause of our universe

3-Our universe is not the cause of our existence

4- There are no laws , principles , rules….etc that dictate a similar scale to the energies and forces of nature

Then where is the un-naturalness problem? Is it in the mind of the beholder ?

Ash, please read this article…

http://io9.com/did-the-higgs-boson-discovery-reveal-that-the-universe-512856167

Matt, I see there is a puzzle if you hold the following assumption to be true: “there’s no obvious reason to expect these unknown effects in red are in any way connected with the known contributions in blue.”

But isn´t the cancellation of the different terms, the blue and red ones, some kind of strong hint that there is such a connection? The reason might not be obvious, but doesn´t it look likely (or some might say, even “obvious”) that such a reason must (or could) exist?

Someone brought up an analogy, like: Imagine some thousand pieces of metal, all of different shapes. But when you put them all together, you get a car instead of just a pile of metal. Isn´t that strange? Well, only if you assume the pieces were created independently of each other.

Apart from my question, many thanks for your efforts to explain us this matter.

Markus, I had the same reaction early on in learning about these matters (in which I am still a novice, and a layman). I believe one response often given is that it is not obvious such a reason (for cancellation between opposing terms) must exist, because there is an alternative: a multiverse, where each nucleating bubble has its own physics, and anthropic selection effects lead to the values in our universe being constrained to come so close to cancelling, with no further explanation necessary or even possible for things taking on the values they do in our observed reality.

This is only half-serious, but I think the analogous reasoning in your example of a car would be: let’s say you are a member of a species that can only exist inside a car. And you live in a universe where thousands of pieces of metal, of random different shapes and sizes, are created from nothing, thrown together randomly and spewed out into space, over and over and over again without end. You find yourself in a car, wondering just how this car got put together and what caused its pieces to fit together just so. It turns out there’s no good reason that would satisfy you, save that you couldn’t have been there in the first place to ask the question in the Vast majority of assemblages–the fact of your existence selects from among these assemblages only those, a Vanishing minority, that allow you to be there.

I sure hope it turns out there’s more of a reason than this, though!

In addition it is logically impossible to construct a mathematical system in which the constants of the SM are the variables which solving the system we get their values since then a new set of meta- constants are required to solve the equations which in turn need hyper- system leading to infinite regression.

No, Matt, it is so. Without a theory to specify the parameters of nature we can never explain the relation between Planck’s mass and the W and Z masses, or the ratio of the gravitational force to the other forces. That is the hierarchy problem as stated by you. The death of the M-theory dream killed any hope of solving the problem. So it is so, Matt; it is so, my friend.

Now, after the collapse of the hope of producing the above-mentioned unknowns via M-theory, where 10 to the power 500 ensembles are possible and where all hope of finding a theory that specifies all cosmic parameters, instead of inputting them by hand, has vanished, even discovering new forces and particles via any route cannot solve the fundamental problem of finding the parameter-generating mechanism. As such, any hope of solving the hierarchy problem may be a mirage.

Not so. There is no reason for this conclusion. What happened to the M theory dream — which may have nothing to do with the real world — has no bearing on whether the hierarchy problem has a solution.

Not only that , the SM does not specify any constant , does not specify any coupling strength , nor any reason for three generations of particles , nor any hint for any system of force values ……as you said before , it is only an effective theory meaning a tool for us to calculate interactions effects , no more

Matt, where are your articles on the Standard Model (or =partial grand unified theory?) that compare its prediction (by calculation) and experimental data? I heard you saying repeatedly that the SM is spectacularly accurate and that sounds pretty good. but I want to see, check, and calculate its accuracy one(by calculation) to one (by data/experiment/observation) for each (simple) concrete specific physical phenomenon. I also want to know where/how/why the SM breaks down and where(under what conditions(range/scale/applications etc.)) it is robust with specific (simple) examples. For what applications is the SM useful? Is the SM useful for modeling/calculating a behavior of a living cell or a molecular machinery inside a cell? With proper simulations does the SM accurately picture/draw photon – molecule interaction? For example, how a single (or multiple?) photon(s) interact and alter structures of (macro)molecules inside a cell?, or how the molecule emits a photon(s)? Does the SM almost completely accurately describe every single moment of how a photon is absorbed to a molecule during photosynthesis (with where the energy is localized in which fields at every instance)? Could you point me to such simulation models (CG/video simulation etc.)? Or the CG/movie simulations(with real data) by SM which describes/depicts/draws the propagation of a photon in vacuum?

You can find a few hundred things to check if you read

https://twiki.cern.ch/twiki/bin/view/AtlasPublic

https://twiki.cern.ch/twiki/bin/view/CMSPublic/PhysicsResults

http://lhcbproject.web.cern.ch/lhcbproject/CDS/cgi-bin/index.php

http://cds.cern.ch/collection/ALEPH%20Papers?ln=en

http://l3.web.cern.ch/l3/paper/publications.html

http://www-zeus.desy.de/zeus_papers/zeus_papers.html

Those are just some of the results from the last 20 years; there are hundreds more from earlier periods. For example:

http://inspirehep.net/search?ln=en&ln=en&p=collaboration+jade&of=hb&action_search=Search&sf=year&so=a&rm=&rg=100&sc=0

There are no known and confirmed large deviations from the Standard Model, and certainly no glaring ones, within any particle physics experiment. That means agreement with many tens of thousands of independent measurements (and if you count each data point as a separate measurement, you’d probably be in the millions). By contrast, if you took a theory which is like the Standard Model but is missing one of the three non-gravitational forces or one of the types of particles that we know about, it would be ruled out by hundreds, even thousands, of experiments.

The only things in nature that are known not to fit within the Standard Model plus Einstein’s gravity are:

1) Dark energy (which you can put into Einstein’s gravity by hand, but presumably that’s too crude)

2) Dark matter (we have ideas on what it might be, but nothing in the Standard Model can do the job)

3) Neutrino masses (which require a small amount of adjustment to the theory — this may be a small issue)

Does this help?

Now, there’s a separate part of your question: for what phenomena is it *useful* to use Standard Model equations, rather than some simplified version. For photons interacting with molecules, trying to use the Standard Model in its full glory would be impossibly difficult. So what do you do?

1) You choose what you want to study (molecules)

2) You take the Standard Model and derive simpler versions of the equations that apply for the study of molecules — giving up details in return for simplicity

3) You then use those simpler equations to study complex molecules and their interaction with photons.

So the trick, and the subtlety, is step 2. You might fail to come up with sufficiently simple equations, so you can’t carry out step 3; or you might oversimplify and then step 3 won’t work. Of course, that’s not a failure of the Standard Model; it’s a failure on scientists’ part to think of a way to apply it to a complex problem.

Needless to say, if you want to check the Standard Model carefully, you check it on simpler problems first! And there it has an incredible track record. And yes, you can derive atomic physics, and the interaction of atoms and light, from the Standard Model, although if the atom is complex you have to rely on computers; and you can go on from there to derive computer techniques for studying complicated molecules, etc.

Matt, yes, what you say sounds consistent (but just listing papers would not be so helpful). So, I want to see this part “Needless to say, if you want to check the Standard Model carefully, you check it on simpler problems first!” Could you demonstrate a few simple(er, st) cases (readable/understandable for freshman level physics) for 1)SM works well and 2)SM fails? If you do not have time just one good demonstration (comparing “calculation” and “experimental data”) for each (1 and 2) are fine. How about, how the SM draws/simulates a propagation of a photon in vacuum? I hope it is simple enough so that it is easy to generate such animated CG movies in 3D coordinate with time change. I want to see how a photon radiates/moves/propagates (how photon field behave) in 3D CG as time ticks with all the known field values visibly fluctuating (perhaps with vector and color density representations etc.) in real time. Or, 3D video representations(=accurate simulation by SM) of quantum fluctuation in vacuum itself would be nice (if possible). Or, how electron field and photon field (with all the known calculable/observable parameters like field direction, energy/wave localization/shape, spin direction of the photon and the electron etc.) behave when a photon is emitted (or absorbed to) from an electron in 3D CG movie with time.

As you may have noticed, I am always looking at the global, not the partial. So, is it safe, for the resting of the mind, to say as a global absolute fact that the mighty forces of OUR cosmos are balanced in a truly unexpected razor-edge equilibrium?

Please: yes, no, or we don’t know. Thanks.

We cannot make statements yet about our universe — only about the theory known as the Standard Model (plus gravity) which may or may not describe our universe. Remember: we don’t know v_max, and we don’t know yet that it isn’t 500 GeV, with discoveries at the LHC just around the corner.

Excuse me, Matt, I am confused: is nature un-natural as it is, or as we see it, or as some of us see it, or as you see it?

I mean, is un-naturalness relative or absolute, or in the category of “maybe, perhaps, it could have been…”?

I need a clear-cut answer. Isn’t that science?

The Standard Model is unnatural as quantum field theory experts see it. That is: the Standard Model is a quantum field theory (and quantum field theory applies also to many other systems in nature, mainly those in solid-state physics.) Examination of the physical systems to which quantum field theory applies suggests that our understanding of how quantum field theory works is excellent. Among all those systems, particle-like ripples are common, and there are some that are Higgs-like. If you look at all the other systems, you never find a light Higgs-like ripple with a mass-energy mc^2 far less than the energy scale at which you find other particles and forces associated with that ripple… or more precisely, the few cases where you do find it are very well-understood, and the principles that apply in those cases don’t apply to the Standard Model. All of this suggests strongly that our understanding of quantum field theory in general, and the naturalness issue in particular, is correct.

Nature, on the other hand, is not yet known to be unnatural, because we don’t know v_max yet. Only if we can show, using the LHC and other experiments, that v_max is much larger than 500 GeV will we be able to conclude that nature itself is, in this sense, unnatural.

Maybe the deep grand secret of nature is that it is so constructed that the mind can describe it with many mathematical structures , every one of which matches data and able to predict true predictions , that is fantastic interaction between Mind and Nature , it is the interaction that cannot be ignored anymore .

It is also what we see in ontological aspects of scientific theories.

Correlation with data does mean identity with ontology , plus if we take the agreed upon fact of underdetermination of theories by data can’t we recognize that maybe ontology in its most fundamental primary aspect is something totally different than field activities with virtual particles no matter how many experiments match our purpose tailored data-match designed theories ?

“…imagine, for example, that you had a car that could go 500 mph, and had an engine that was powerful enough to go that fast in the absence of friction. Wouldn’t you be surprised if it turned out that the engine and friction balanced when the car was going 0.0001 mph? Our problem is vaguely akin to this.”

“It isn’t just an issue of whether the correlation is known; the issue is whether it is a pure accident, or whether there’s a reason.”

Please. excuse my bombastic replies to Larson wherein I included all physicists and launched a seaming attack on Standard Model. I think I know what you are saying, but your article is far too technical for my understanding of physics. I merely wish to understand the principles behind these concepts and can not completely subscribe to SM theory because of the singularity problem and the BB theory. SM works by and large and is proven with tests but it makes the universe appear unnatural because, as you explained to “zbynek” the universe is calibrated to include higher energies in Higgs field when it works perfectly well at the much lower energies. No, there must be a reason for that. Universe must have more fields operating on it. Perhaps another force or body of energy that operates on the space. I know you don’t have time for other people’s ideas on what could be out there but just suppose that universe is not a bubble floating in hyperspace, that it isn’t a brane either but just an empty space encompassing a real source of unimaginable energy. Suppose that energy is seated in the center of our universe and our universe is orbiting this source. Say that we are at a safe distance from the radiation of this source, a goldilocks zone but closer and further distances exist. This could be that margin of possibilities that some physicists see as multiverse potential. Ok, that’s my two pennies worth of contribution to this topic and discussion. I expect physics will have to readapt to new concepts, because the old one is ready to be discussed. Tabues in physics are preventing fine scientist like you from exploring other possibilities. May be with the next generation of scientists, things will begin to move on. No, don’t get me wrong, standard model could be the closest thing to reality of this universe, a real huge step forward, but it must not stagnate. 
I’m truly mesmerized with you taking so openly to a general audience that has no knowledge or very little knowledge of physics. Thank you for that.

“I can not completely subscribe to SM theory because of the singularity problem and the BB theory”

The Standard Model describes all particles and forces *except* gravity. The singularity issue (which we don’t even know is an issue) has nothing to do with the Standard Model. The Big Bang theory (which is in excellent agreement with measurements — I don’t know why you dislike it, given how fantastically well it works) involves both gravity and the Standard Model. If you don’t like the Big Bang theory, you probably dislike the gravity part of it, and not the Standard Model anyway. As for the Standard Model alone, if you don’t like it, you have some explaining to do: it works for hundreds of measurements. It may not be the whole story — indeed it is unlikely to be the whole story — even for non-gravitational physics. But it works extremely well.

Your impression that physicists are wedded to the conventional wisdom and close-minded is simply wrong. When I go to conferences, there are always many talks that go beyond the conventional wisdom. The particular suggestion that you made (or something similar) has certainly been considered. But the problem is that there are dozens and dozens of such suggestions, many far more radical than what you suggest. Almost all of them will turn out to be wrong; I could fill this blog with all the crazy false ideas that I and my colleagues have had, and it would make this blog impossibly confusing. (Other bloggers approach this issue differently.) I tend to report the mainstream, and especially the best-established part of the mainstream; I think that is the best way to explain what we think we know. And of course, some of what is mainstream now will be discarded someday; that’s certain. But we don’t know which parts will be discarded, or why… so we have no choice but to be patient, work hard, and wait for experimental or theoretical insights to push us in the right direction.

Thanks Matt, for giving it to me straight. I’m at great odds coming against you, for if I ask why can’t SM explain gravity, you’d just say because we haven’t discovered gravitons yet. (I could be wrong in my assumptions). Something is truly a puzzlement here, with the theory of particles. We have various fields. Every particle comes as a ripple of that field. You say, fermions have zero energy fields. To me this means that particle mops up the pre-existing field, if particles are ripples in a field. So, if not out of Higgs field then from a composite field of EM and nuclear (strong and weak) fields. Higgs field gives mass to some particles but not to Higgs boson which is scalar in nature and has no spin (?) How can a particle exist without a spin? Higgs boson has a huge mass but Higgs field is not giving it that mass. Could we say then that Higgs boson doesn’t exist without a collision of hadrons and is a by-product of that collision and that a kinetic energy of that collision produces it.

Singularity being mathematical deduction of gravitational force’ capabilities, yes doesn’t belong in SM because SM cannot explain gravity with the known particles. I get that, I knew that. I just mentioned it anyways. Why can’t I accept SM? Because its based on the philosophy that everything in nature must balance out. So we have particles and antiparticles. This leads to an alternate realities theory, which to me is as unnatural as can be. Why do the things have to balance out? That leads to a conclusion that universe came out of zero energy? If that’s not unnatural, I don’t know what is. Next: Multiverse problem: where did the first universe come from? Big Bang theory: foggy start, gloomy ending; universe is on a ‘self-destruct’ course. Mankind has no future since we cannot leave this universe. Scouting the galaxy by manipulating the physical laws, creating wormholes is a short term solution if achievable. And then escaping out of the incinerating hug of our dying star or, getting wiped out by an asteroid or a comet or, by our own means. I think that universe is a much better place than what theory suggests. Yes, Its full of deadly radiation, but something placed us in a safe place. Now, that would be unnatural if nothing else exists but a physical process of evolution, (creation and destruction of matter), and we just happen to be an accident of this mindlessness. That to me would be highly unnatural. Otherwise, Standard Model doesn’t bother me at all. OH, I might have a wrong view of physicists as a whole, but you are not in that group, so I’m glad I stumbled on your blog.

Wishing you all a positively peaceful, relaxing, rejuvenating weekend!

Matt, you have an error in your text. There is at least one theory which doesn’t have that naturalness problem.

You haven’t read carefully. There are MANY theories that don’t have a naturalness problem, and I am going to explain the most famous ones soon. I will not explain those that are only believed in by one person, however.

Touché 🙂 I made the decision to take another route (in order to change the paradigm in particle physics), experiments it is. My last option so to speak. But blasting small enough amount of antimatter without high tech lab equipment is going to be *extremely* difficult. So wish me luck! 🙂

@Strassler The fact that things are cancelling out and the total energy is nearly flat is another way of saying that there is some unknown symmetry in nature! And what is that symmetry if it is not supersymmetry.

I would like to draw your attention to a recent blog post by Sean Carroll where he discusses the early results from dark matter searches, which are giving hints of dark matter at 5-10 GeV energy levels. He pointed out that if that turns out to be correct, then there might be roughly equal numbers of baryons and dark matter particles, which might have something to do with baryon number conservation. What kind of symmetry can give rise to equal numbers of heavier (but not that heavy) dark matter candidates? And other results point to interacting (exciting) dark matter, pointing to the presence of dark electromagnetism-like forces that do not interact with electric charge.

A symmetry is only one possible explanation. Dynamical effects can cause this also. I will discuss this soon.

Regarding dark matter: again, a symmetry is only one possible explanation. It could also be a dynamical effect.

As for dark forces and dark particles, see: http://arxiv.org/abs/hep-ph/0604261

I should have said no such thing as empty space, not vacuum, sorry.

When I said that there is no such thing as a vacuum, it was meant as a question, but in a sense doesn’t a field permeate all of space? At some point in the creation of the universe, matter must have sprung from fields, right? Of course that would be the particles that later became hydrogen and helium, etc. You can speak of virtual particles, which evidently spring in and out of existence due to the peaks in the waves that fields have, I would think. Anyway, I’m looking at it from the perspective of how I would visualize an ocean wave peak and then disappear, while the ocean still fills all space, though we only see the surface. Please excuse my less than knowledgeable questions.

Hi, I’m somewhat confused. You state that v_max is how far you could push the value of the Higgs field within the tolerance of the Standard Model, but that does not seem to imply that the real field has to be high. In fact, unless I misunderstand, it is deemed to be 246 GeV, which is low and does not need any unnatural factors. So why do you need to push the foundations of the model that far?

The point here is not to put the cart before the horse. We have *measured* the Higgs field to be 246 GeV. But to show that the theory *predicts* this, we need to show that 246 GeV is actually a minimum of the energy. To prove this we must do a general calculation that doesn’t first assume that the Higgs field is low. Otherwise we’d be in danger of assuming what we were trying to prove.

But don’t worry; if we *had* assumed that the Higgs field’s value was small, but that the Standard Model was valid up to v_max >> 500 GeV, then we would simply have discovered, by calculation, that our assumption was wrong… and that there is indeed no minimum in the low-Higgs-field region at all.

Yes – but even according to your text 246 does not have to be the absolute but just the regional minimum so why strain a good model to breaking point?

Hmm. Not sure I understand you yet. We want to understand whether the good model could be the complete model or not. If it is the complete model, then the thought experiment of pushing up the Higgs field to large values should be legitimate. And then we discover that if it *is* the complete model, it actually isn’t that good, because there won’t be a minimum in the energy anywhere near 246 GeV. Which leads us to think it can only be a good model up to around 500-1000 GeV, and then we should expect other phenomena to start showing up, or maybe something else weird is going on.

Sorry, it seems I’m the one who does not understand, but I’m not sure where I go wrong:

There is one Higgs field at 246 GeV, correct?

Because it’s there, there has to be a minimum in the energy density at this level?

We don’t know why, but we don’t need any unnatural factors to make it so?

If the field were >> 500 GeV we would need unnatural factors, but it isn’t. Why does the Standard Model have to hold for hypothetical fields that do not or may not exist? As an analogy, it’s like designing a car that can go 500 mph but knowing you can never exceed 60 mph on the highway.

Ah — do not confuse the Higgs field’s actual value with v_max. v_max is as large as we *COULD* take the field and still trust our equations. The issue isn’t whether the Higgs field’s value is >> 500 GeV ; it’s whether the equations would correctly describe the world if the Higgs field’s value were that big.

Say it this way: Suppose you know a car can go at 500 mph, but you discover the car is going at 60 mph. Now you want to explain: why is it at 60 mph, given that it can go 500 mph? One way to find out is: try running the car (maybe just in your mind) at 200 mph, at 300 mph, at 400 mph, at 20 mph, at 30 mph. Maybe you discover that the engine’s force and friction on the wheels exactly balance at 60 mph: if you try to run the car at 20 mph, it will speed up; but if you try to run it at 300 mph, it will slow down; and right at 60, it will coast.
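The balancing picture in this analogy can be sketched with a toy calculation. This is only an illustration, not a model of any real car or of the Higgs field: it assumes a constant engine force and a friction force proportional to speed, and the names `ENGINE_FORCE`, `FRICTION_COEFF`, `net_force` and `balance_speed`, along with all the numbers, are made up so that the two forces happen to balance at 60 mph.

```python
# Toy model (illustrative assumptions only): constant engine force,
# friction proportional to speed. Units are arbitrary.

ENGINE_FORCE = 60.0    # assumed value, chosen so the balance point is 60 mph
FRICTION_COEFF = 1.0   # assumed friction force per mph


def net_force(speed_mph: float) -> float:
    """Engine force minus friction; positive means the car speeds up."""
    return ENGINE_FORCE - FRICTION_COEFF * speed_mph


def balance_speed(low: float = 0.0, high: float = 500.0, tol: float = 1e-9) -> float:
    """Find the speed where the net force vanishes, by bisection.

    Assumes net_force(low) > 0 and net_force(high) < 0.
    """
    while high - low > tol:
        mid = 0.5 * (low + high)
        if net_force(mid) > 0:
            low = mid    # still speeding up: balance point is higher
        else:
            high = mid   # slowing down: balance point is lower
    return 0.5 * (low + high)


if __name__ == "__main__":
    for v in (20, 30, 200, 300, 400):
        trend = "speeds up" if net_force(v) > 0 else "slows down"
        print(f"at {v} mph the car {trend}")
    print(f"forces balance near {balance_speed():.3f} mph")
```

Trying out speeds above and below the observed one, as the code does, is the same move as the thought experiment: one does not assume the answer is 60 mph, one checks where the forces actually balance.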

But now imagine, for example, that you had a car that could go 500 mph, and had an engine that was powerful enough to go that fast in the absence of friction. Wouldn’t you be surprised if it turned out that the engine and friction balanced when the car was going 0.0001 mph? Our problem is vaguely akin to this.

Thanks for your questions. You and several other commenters are finding a number of pedagogical flaws. I’m going to have to do a serious rethink of how to reword some of this article.

Thanks for your patience,

Okay, in the case of the car model I have to assume there is a hidden direct correlation between the car’s speed and the friction, and I suppose this is what you show in diagram 7. But if that correlation becomes known, would it not become natural?

From diagram 5, however, it would appear that you don’t need absolute cancellation as long as the curve beyond 500 does not dip lower than the point at 246. So if you have a field that increases exponentially, as in the first of the blue graphs (it would be interesting to know what field that represents), and it were considerably stronger than any other field, one could postulate that no more potential low points lie beyond 500. Wouldn’t that be a more “natural” assumption?

It isn’t just an issue of whether the correlation is known; the issue is whether it is a pure accident, or whether there’s a reason.

As for the suggestion in your second paragraph: if that were the case, and there were no accidental cancellations, then the minimum of the curve would be at zero, and thus the Higgs field’s average value would be zero and the particle’s mass would be large. In short, you’d have a world in class 2, not class 3.

Going through the comments, I think I was asking the same thing as JonW in a somewhat more naive way, and thanks to your explanations to me and also to him I think I now finally get the picture (more or less). When I say correlation, I mean one with an underlying but unknown reason, because random would be unnatural, and I guess that’s what you’re saying too. I suppose this is somewhat of a long shot, but if particles are produced in pairs, maybe universes are also. Could we not then imagine a corresponding “anti-universe” with all fields reversed? If two such opposing universes were still in the process of separating, or possibly colliding, could this lead to an overall field cancellation and appear locally similar to the situation that we experience now?

You could imagine that, but now make equations that actually do it. That’s the hard part. Then, having succeeded, make a prediction based on those equations. That, too, may be difficult.

The maths to do that is beyond me, but I would predict that a mixture of opposing universes would not be very stable, at least not for long enough to get to the present stage.

One thing I’m not sure I understand is how the Higgs particle gets mass. It seems logical that changing the Higgs field value increases the energy of empty space and that makes it more difficult to vibrate the field. However wouldn’t the same thing work for any other particle (electron, up quark, etc)? For the other particles the mechanism of getting mass is through interaction with the Higgs field. Not because changing the electron field value would increase the energy of empty space. Is that correct? Because my understanding is that if the Higgs field were zero, the other particles would be massless – so the zero point energy would not help them get their mass.

Or the zero point energy would give them some very small mass even without the Higgs field? And the Higgs boson does not need any other non-zero field to interact with, because for the Higgs it’s enough to use the zero point energy to get its mass?

Very good question. There is a pedagogical flaw here, and you’ve identified it. I have to think about whether I can improve this. Both your old impression *and* what I’ve said are correct, but I agree the relationship between them isn’t well-explained.

For all fields that we know so far (and this may not be true of other fields currently unknown), the mass of the particle is associated with the Higgs field in some way. However, the story for the Higgs particle is slightly different from the others.

About the Fields: The electron field’s average value is zero; this is true of any fermion. The W field’s average value is zero; calculation shows that the minimum energy of empty space arises when the W field is zero on average. Indeed, the Higgs field is the only (known) field for which there’s something complicated to calculate in order to determine whether the energy of empty space prefers it to be zero or not.

About the Particles: This is the same, in a sense, for the Higgs and for everything else. It is true that particles like the electron get their mass by interacting with the Higgs field. But how does that work in detail? An electron is a ripple in the electron field. That means that the electron field, which is normally zero in empty space, is non-zero as the electron goes by. But where the electron field is non-zero, it interacts with the Higgs field, which also isn’t zero even on average; and the interaction of the two increases the energy in that region. Consequently the electron field’s ripple has more energy than it would have in the absence of this interaction, and the excess energy is mass-energy, crudely speaking. This is general. The issue is: by how much does turning on a field like the electron field increase the energy of empty space? The amount by which it increases tells you something about the mass-energy of a ripple in that field.

The crucial thing that’s different about the Higgs field and particle is that the fact that the Higgs field affects so many other fields and their particles means there’s a complex interplay between the Higgs field’s value, the Higgs particle’s mass, and the energy of the quantum fluctuations of the other fields and how they depend on the Higgs field’s value. The description I gave above of how the Higgs particle’s mass comes about is not unique to the Higgs, but its relation to this complex curve, which comes from the quantum fluctuations of other fields, is special.

Thank you very much for the explanation.

Regarding “the Higgs field is the only (known) field for which there’s something complicated to calculate in order to determine whether the energy of empty space prefers it to be zero or not”:

I’ve read that in the theory of the strong interaction (QCD) the vacuum has interesting structure, too. In particular that there are quark and gluon condensates characterizing the vacuum. Wouldn’t these also be due to fields that prefer to be non-zero in empty space?

Good point. I should have said: “elementary field”. Quark and gluon fields do not have any such issue, but composite fields made from a combination of quark and anti-quark fields, and from pairs of gluon fields, have this issue. I will get into this subject when discussing “solutions”.

You are really a true scientist, and an honest, sincere and wonderful person, Matt.

All respects and regards are due to you for your most respectful science.

Thanks

By the un-natural concept of field, I mean that reality is built on some other kind of primaries.

I think you are mixing two definitions of natural.

The spectacular cancellation between known and unknown large quantities is what is unnatural. It is not concepts that are unnatural here.

The point is: even if we replace fields with something else, that can only change the UNKNOWN large quantities. The known large quantities will still be there… because we know quantum fluctuations of known fields exist and have large energy. That part isn’t dependent on the field concept.

I mean: maybe what is un-natural is the field concept, despite the correlations between the data and the assumption.

Remember “unnatural” means “non-generic”. What’s a “generic” concept?

Is the un-naturalness problem totally dependent on our assumption that the primary cosmic fundamentals are fields, so that if in the year 2100 that assumption were proved wrong the un-naturalness problem would vanish? Or is it itself some kind of fundamental problem? In other words, are ANY fundamental building blocks tied to un-naturalness?

It’s hard to imagine that simply replacing fields with something else would eliminate the problem, because we know field theory (and its quantum fluctuations) does a remarkably good job of explaining particle physics on distance scales from macroscopic down to 10^(-18) meters, and the naturalness problem arises already at 10^(-18) meters. Just as Einstein’s new theory of gravity didn’t make it necessary to fix all predictions of Newton’s theory (i.e., bridges didn’t fall down just because gravity is more complicated than Newton thought), a new theory of nature isn’t likely to eliminate the naturalness problem unless, of course, the new theory changes the Standard Model at an energy of 500-1000 GeV (a distance of 10^(-18) meters) or so. (Remember that there’s no naturalness problem with the Standard Model if v_max is in this range.) However, if fields were replaced with something else in the 500-2000 GeV range, we would have expected predictions from quantum field theory for physics at the Large Hadron Collider to begin to fail at the highest energies. Instead, those predictions work very well.
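For readers wondering how an energy of 500-1000 GeV corresponds to a distance of about 10^(-18) meters, here is a rough sketch of the conversion. It assumes the convention distance ≈ hc/E (the wavelength of a quantum of energy E); using ħc/E instead gives numbers about 2π times smaller, so only the order of magnitude should be taken seriously. The function name `wavelength_m` is just an illustrative choice.

```python
# Rough energy-to-distance conversion, assuming distance ~ h*c / E.
# Only the order of magnitude is meaningful here.

HC_MEV_FM = 1239.84198   # Planck constant times speed of light, in MeV * fm
FM_IN_METERS = 1e-15     # one femtometer in meters


def wavelength_m(energy_gev: float) -> float:
    """Wavelength in meters of a quantum with the given energy in GeV."""
    energy_mev = energy_gev * 1000.0
    return HC_MEV_FM / energy_mev * FM_IN_METERS


if __name__ == "__main__":
    for energy in (500.0, 1000.0):
        print(f"{energy:.0f} GeV  ->  about {wavelength_m(energy):.2e} m")
```

With this convention both energies land at a few times 10^(-18) meters, consistent with the scale quoted above.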

In the blue, red and green curves, I suppose you are assuming that there is only one Higgs field and one Higgs particle at the currently known values. Does having more Higgses at higher and higher energies help with the fine-tuning problem or make it worse? In other words, are more Higgses buried in the high value of v_max?

Actually, if there were, say, two Higgs fields, the only thing that would change is that instead of my curves (functions of one variable), I’d have to draw functions of two variables. The argument would be entirely unchanged; adding more Higgs fields does not give any natural cancellations, and the problem would now be just as bad.