Yesterday I spent the afternoon at the Third Indian-Israeli International Meeting on String Theory, held at the Israel Institute for Advanced Studies. The subject of the meeting is “Holography and its Applications”. No, this isn’t holography as in that optical trick that allows you to create a three-dimensional image on the security strip of your credit card — this is “holography” as string theorists like to discuss it, that trick of describing gravitational or string-theoretic physics in a certain number of spatial dimensions as quantum field theory (without gravity) in a smaller number of spatial dimensions. It’s impressive, even stunning, that sometimes you can use a precise form of the holographic principle to solve some difficult string theory problems by rewriting them as easier quantum field theory problems, and solve some difficult quantum field theory problems by rewriting them as easier string theory problems.

I worked in this research area on and off for quite a while (mainly 1999-2007) so I know most of the participants in this subfield. In fact my most commonly cited paper happens to be on this subject. But ironically my role at this conference was to present, as the opening talk, a review of 2011 at the Large Hadron Collider (LHC).

My talk started with an extended introduction, a sort of “everything you absolutely have to know if you want to understand what’s happening at the LHC.” Most string theorists know a lot of particle physics, and most are aware of what is happening at the LHC, but most of them don’t know all the details. [Well, after yesterday, some of them know a lot more details than before, maybe more than they wanted.] Then I talked about some of the most basic things we learned in 2011 from the LHC:

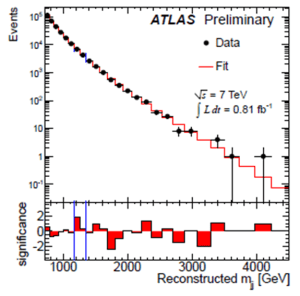

- quantum field theory (the mathematics used in particle physics) in general, and specifically quantum chromodynamics (the mathematics used to predict the behavior of quarks and gluons), still work just fine, as far up in energy as the LHC’s data can currently reach, roughly 3 TeV or so (see Figure 1);

- if there are new phenomena occurring at the LHC, they most likely are not produced with very large rates, or they involve particles that decay in a complex fashion, one that does not easily stand out in the most common searches;

- the most popular forms of supersymmetry and some other similar theories (which have high production rates and would generally stand out) are increasingly disfavored, though other, trickier variants remain largely unconstrained;

- partner particles for the top quark, widely expected in many models that attempt to address the hierarchy problem, haven’t been observed (see Figure 2), though the searches are hard and haven’t gotten that far yet. There’s a lot more work to do over the coming years — especially in the case of supersymmetric partners (top squarks, as they are called) which are particularly tough;

- and a few other things…

And finally, I talked about the search for the Higgs particle, focusing on the recent measurements at the ATLAS and CMS experiments aimed at Higgs decays to two photons and to two lepton-antilepton pairs, explaining (as I did here) that the data needs to be interpreted with care, and why I think the jury is still out. I reminded them that humans are terrific at seeing patterns — even when looking at random data with no actual patterns in it. One always needs to apply a discount for this psychological tendency.
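To give a rough feel for that discount, here is a minimal Python sketch. It is a toy model under stated assumptions, not anything resembling an actual ATLAS or CMS analysis: each "experiment" is a histogram whose bins contain only independent Gaussian noise, and we ask how often at least one bin fluctuates upward by more than some number of standard deviations. The function names, the bin count of 40, and the 2-sigma threshold are all hypothetical choices made for illustration.

```python
import math
import random


def local_excess_probability(n_bins, threshold_sigma):
    """Analytic chance that at least one of n_bins independent,
    pure-noise bins fluctuates above threshold_sigma (toy model)."""
    # One-sided tail probability of a standard normal variable.
    p_single = 0.5 * math.erfc(threshold_sigma / math.sqrt(2.0))
    return 1.0 - (1.0 - p_single) ** n_bins


def simulate_fraction_with_bump(n_experiments, n_bins, threshold_sigma, seed=0):
    """Fraction of simulated signal-free histograms that still show
    at least one bin above threshold_sigma."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    hits = 0
    for _ in range(n_experiments):
        # Every bin is noise only; there is no real signal anywhere.
        if any(rng.gauss(0.0, 1.0) > threshold_sigma for _ in range(n_bins)):
            hits += 1
    return hits / n_experiments


if __name__ == "__main__":
    for bins in (1, 40):
        simulated = simulate_fraction_with_bump(20000, bins, 2.0)
        expected = local_excess_probability(bins, 2.0)
        print(f"{bins:2d} bins: simulated {simulated:.3f}, analytic {expected:.3f}")
```

In this toy setup a 2-sigma upward fluctuation in one pre-chosen bin is rare, but with 40 places to look, a bump of that size shows up somewhere in more than half of the signal-free histograms. Real searches have correlated bins, backgrounds with their own uncertainties, and more careful statistical treatments, so this only illustrates the direction of the effect.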

I entitled my talk “Juggernaut and Behemoth”, two words that seem appropriate in describing the LHC, and that have entered the English language (mispronounced, of course) from the long and great traditions of the two cultures most closely associated with the conference, the Hindu and the Hebrew. Juggernaut, which in English conveys a notion of something powerful and unstoppable, is a rough rendering of Jagannath, a Hindi word meaning “Lord of the Universe” and referring to the god Vishnu. Behemoth, which in English implies a huge creature, is a monster described in the book of Job (from the Old Testament, i.e. Jewish, portion of the Bible). In bringing these images to mind, I intended to suggest not only the physical size and scale of the LHC but also the daunting amount of data, of information, and of knowledge that it generates, and the immense importance of its scientific research program, both in informing our understanding of the basic workings of our universe and in determining the future of particle physics as an intellectual endeavor. In many respects, the LHC borders on overwhelming.

Unfortunately I couldn’t go back to the meeting today, because this morning I had the honor of giving the weekly colloquium at the Weizmann Institute, my hosts during this short visit. When I first wrote a colloquium talk on the LHC, back in 2007, I could give it several times in a row before it would go out of date. But nowadays, things change so quickly that I have to update the colloquium for each presentation… especially the section on the Higgs particle!

Expectations are that we will get a little more news on the Higgs searches pretty soon — within a week or so.

4 Responses

Quality and quantity. My wife worked on CHARISMA, a unique resonant ionization spectrometer at Argonne Nat’l Labs, and they fought tooth-and-nail for just a few quality measurements, sometimes spending weeks for just a couple readings. My graduate work saw the flipside — straightforward measurements, but gobs and gobs and gobs of data.

I imagine working at the LHC is like both of those combined, times, like, a million.

— Tim

scienceforfiction.com

I always like to read articles like this, which contain, in addition to awfully well-explained physics, some personal reports about the life of a theoretical physicist.

And I look forward to next week 🙂

Cheers

Hi Matt — I wish I could’ve been a fly on the wall at your presentation. Any possibility of posting it or emailing a copy? Regardless, this post made me wonder about two things in particular, speaking mostly to my naivete:

1) How is 11-dimensional M-theory condensed into 2-dimensional holography? (Just reading this question, you’re probably sighing out loud; feel free not to worry about it, or maybe suggest some references I could read.) I tried to tackle “The Holographic Universe” at http://wp.me/p1SONx-37

2) What would be a major benefit — say, technological advance, data analysis technique, additional measurement, or increase in precision — that would advance the analysis of all that data? That is, how can we overcome this hurdle of information overload? Any “futuristic” remedies? A-I? Quantum computing? Advances in visualization and data manipulation? I’d be very interested in your thoughts on handling massive amounts of data, and some of the newer visualization techniques out there, like http://wp.me/p1SONx-3l

The metaphors were great though. Did the audience appreciate them, in terms of describing the nature of the LHC and the data it’s producing?

Thanks! Always enjoy your posts.

1) Tim, holography is a tough one to do quickly, if you want it done right. It’s a lot harder than extra dimensions, and that took a sequence of six articles (and I’m not done yet.) I will do holography eventually but it is not so easy to figure out how to present it, and there are clearly some prerequisites to come first. It will probably not be this year (too much LHC stuff.)

2) Gosh. I am sure there would be some real uses for extreme computing, though the really hard part about LHC data is actually not just the volume but the complexity of it. It takes a dense and tricky mix of humans and computers to deal with it. Clearly significant increases in computer power would help remove time delays — reprocessing such a huge data set to make some improvements takes months — and truly intelligent artificial intelligence could do a lot of work for us. And computer systems that allow humans to interact very efficiently with computers and data — including being able to rapidly manipulate 3-dimensional or even 4-dimensional plots — might allow some real insights. But at the LHC there is an enormous amount that humans will continue to have to do, because in a machine this complex there is always a device somewhere that is generating electronic noise, or effects from the beam being a little jittery, or something else, and you need quite an intelligent mind to make the right decisions about how to handle such things.

I will try to remember to post the talk, or at least the parts of it that could be comprehended. The talk seemed to be well-received, probably more for its pedagogical and scientific content than its over-arching metaphors, but I think some people, at least, were amused.