Matt Strassler [April 2, 2012]

We now have many details of what went wrong at OPERA, the experiment which produced an anomalous result showing neutrinos arriving earlier than expected, widely interpreted in the press as a violation of Einstein’s dictum that nothing can go faster than the universal speed limit, the speed at which light travels. No one in the scientific community is surprised that the result was due to an error of some type. Most scientists, including myself, weighed the chances of the experiment being correct as extremely small; though most people kept an open mind, I don’t recall ever having a conversation with a serious scientist who thought it was likely to be correct. The main reason was that it was very difficult to imagine any way that it could be correct and yet be consistent with many other classes of experiments that confirmed Einstein’s equations for relativity, or even be self-consistent; see for example this article, and this one.

But the process of finding a mistake in an experiment is itself often an interesting and instructive story. And this one is no exception. In this article, I’ll walk you through the process by which OPERA found, diagnosed, and removed their mistakes.

[I would like to thank commenters Eric Shumard, Titus and A.K. for their help in making this article much better than it otherwise would have been.]

First, let me state the obvious disclaimer: at the moment of writing, my information is partial. I’ll try to indicate where I don’t know things.

Second, let me say that I will withhold judgment (until the end) as to the human elements of OPERA. Let’s start by just looking dispassionately at the information.

Discovery and Diagnosis of Potential Problems

The best place to start is with a slide from a talk by Maximiliano Sioli, from Bologna, given on behalf of OPERA, at the March 28th mini-workshop on neutrino timing held at the Gran Sasso laboratory, where OPERA is based. [Note that OPERA is not, despite reports in the press, a CERN experiment; CERN only provides the neutrinos. All the known mistakes made at OPERA were in equipment installed inside OPERA’s location at Gran Sasso, well outside CERN’s purview.] This slide, shown in Figure 1, gives the full timeline.

What you learn from this is that between September and November, after the original OPERA result (what I call OPERA-1) was released publicly and the media frenzy began, OPERA personnel did what they should do: they made many studies of their work to see if they could find mistakes. But their focus was on higher-level questions: had they done the data analysis correctly? Had they missed anything in their calculations involving special and general relativity such that their expectation for the neutrino arrival time was off? [There is also a statement about “Delayed double cosmic muon events”; this is an attempt to cross-check the result, described in the first comment below, but I’ll skip this because it is in the end irrelevant.] Apparently the lower-level questions — is every wire functioning? have we double-checked every single part of the experiment? — were not at the top of the agenda. That may be because they got many questions from the outside world after their initial presentation, and outsiders would only be able to suggest higher-level problems to check; no one from outside would have ever been able to suggest that perhaps they hadn’t screwed an optical fiber in correctly!

Then came what I call OPERA-2 (which OPERA calls “bunched beam”, or “BB”) where they measured the neutrino speeds in a different way, using very short neutrino pulses. As I explained here, OPERA-2 is a much better way to do the measurement; I would largely disregard the original OPERA-1 and focus on OPERA-2, in my own thinking, because it is so much clearer how to think about the result. The fact that the results from OPERA-1 and OPERA-2 together (the two red numbers marked δt in Figure 1) agreed, to within the measured uncertainties, indicated that the data analysis techniques were not at fault, and also made it unlikely that any intermittent equipment problem was responsible.

Only in December did they start to look at the equipment that actually allowed them to carry out the timing measurement. I don’t know why. One might have thought this was the first priority, but perhaps some people within OPERA felt that any problem in the timing would have shown up through some other cross-check that they’d already made? Or perhaps this was one part of the experiment in which they were particularly confident (apparently overconfident)? This is one of the main puzzles at the time of writing.

Right away, a major problem showed up. On December 6 – 8, measurements took place of the time interval between

- the moment when a signal (a laser pulse) is sent from the lab’s GPS timing equipment somewhere on the earth’s surface, across 8.3 kilometers down into the underground lab and to OPERA itself, where the laser pulse is converted (in a special device, which I’ll just call the box) to an electronic signal for use by the OPERA Master Clock, and

- the moment when the Master Clock sends a timing pulse to synchronize all of OPERA’s many computers and other devices.

The problem is shown in Figure 2, taken from a slide by G. Sirri, also of OPERA and from Bologna. Most disturbing is that this way of measuring the timing had not been repeated since 2007! (Why not?! This is another major puzzle at the time of writing. Presumably they checked the timing using some other methods which for some reason didn’t reveal the problem…?)

- The measurements in 2006 and 2007 showed a time interval of about 41000 nanoseconds [billionths of a second], but

- The measurement on December 6-8, 2011 showed a time interval of about 41075 nanoseconds.

This change was of the right type to potentially cause an apparent early arrival time for the neutrinos, and of roughly the right size to explain OPERA’s measurement. This must have generated immediate and considerable alarm among those within OPERA who made the neutrino speed measurement. But the discovery of a potential problem is not the same as the unambiguous determination of a problem, so investigation continued.

Sometime over the next few days, efforts to track down the problem led people to discover that the fiber carrying the laser pulse to OPERA’s converter had not been screwed correctly into the box. This is shown in Figure 3. Despite reports in the press, this is not a “loose wire”. A copper wire that isn’t tightly connected to an electrical lead can cause an electrical device to behave erratically, because electrical current will sometimes flow and sometimes not. But the optical fiber isn’t what most people think of as a wire — it carries light, not electrical current; and it wasn’t loose, it just wasn’t screwed in all the way. That’s relevant, as we’ll see.

What those within OPERA who were studying this problem found was that when they screwed the optical fiber in tightly, the time interval went right back to 41000 nanoseconds (Figure 2 again). So they knew then, on December 13th (ironically, the same day that there was all the excitement about the search for the Higgs particle at the Large Hadron Collider over at CERN, 730 kilometers away), that the fiber not being screwed in right had the potential to shift their results towards an early-arrival time for neutrinos by many tens of nanoseconds.

But at around the same time (when? I’m not sure…) another problem appeared. They detected some kind of timing drift. For technical reasons, OPERA took data in 0.6 second chunks, and cross-checks of measurements suggested that the timing at the end of a chunk was not calibrated properly relative to the beginning of a chunk. So this added confusion to the situation. The drift would also have affected their measurements, though possibly in the other direction, causing neutrinos to apparently arrive later.

So they had two problems, a shift from a fiber connector, and a drift from somewhere not yet identified, and the questions they had to answer in mid-December, closely paraphrasing Sioli’s talk at the mini-workshop, were

- What were the sources of the fiber time delay and of the drift effect?

- How long had these two problems been present? Long enough to affect the OPERA measurements?

Somewhere over the ensuing two months (including what must have been quite an unpleasant holiday) they managed to untangle the puzzle.

First, what was causing the two effects?

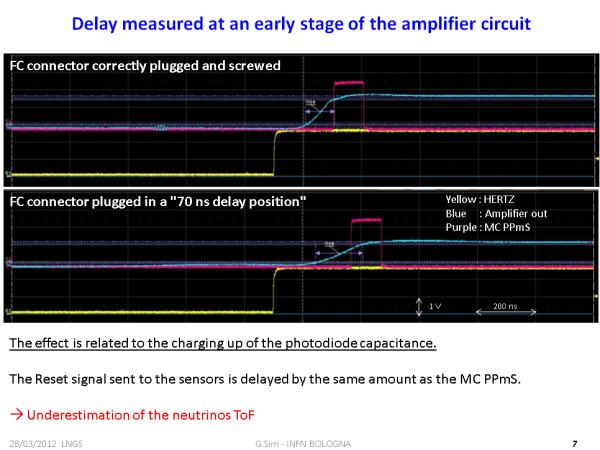

The reason an unscrewed fiber can cause a time delay is the following (and this was guessed almost precisely by Eric Shumard, one of the commenters on this site, shortly after OPERA’s problems became public.) It is shown in Figure 4. The timing system works by sending a laser pulse, of considerable length and high intensity, at prescribed intervals (once every thousandth of a second), down the fiber. The start of one of those pulses is shown in yellow at the top of Figure 4. That pulse then enters the box. Rapidly (but note Eric Shumard’s comment on this post), over about 100 nanoseconds, that pulse generates an electrical voltage (shown in blue) inside the box (I’m skipping some electronics details) and when the voltage reaches 5 Volts, it causes the Master Clock to register the timing pulse and fire off a signal (shown in pink-purple) to the rest of the OPERA experiment. But when the fiber isn’t screwed in right, an effect such as that shown at the bottom of Figure 4 results; not as much light as expected enters the box, and this slows the rate at which the electrical voltage builds up, delaying the point at which 5 Volts is reached, and therefore delaying the Master Clock timing pulse. The whole effect is several tens of nanoseconds, depending on how improperly the fiber is screwed in. This is the effect that generated OPERA’s apparently early neutrino arrivals.
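For readers who like to see the logic spelled out, here is a minimal sketch of that threshold effect in Python. It is a toy model, not OPERA's electronics: it assumes the voltage rises linearly, that its slope is proportional to the fraction of laser light entering the box, and that a good connection reaches 5 Volts in 100 nanoseconds. The names `trigger_time_ns`, `extra_delay_ns` and `light_fraction` are invented for illustration.

```python
THRESHOLD_VOLTS = 5.0     # trigger level quoted in the text
NOMINAL_RISE_NS = 100.0   # ASSUMED time to reach 5 V with a good connection


def trigger_time_ns(light_fraction):
    """Time for a linearly rising voltage to reach the threshold.

    light_fraction is the hypothetical fraction of the laser light that
    actually enters the box; 1.0 means a properly screwed-in fiber.
    """
    if not 0.0 < light_fraction <= 1.0:
        raise ValueError("light_fraction must be in (0, 1]")
    nominal_slope = THRESHOLD_VOLTS / NOMINAL_RISE_NS  # volts per nanosecond
    slope = nominal_slope * light_fraction             # less light, slower rise
    return THRESHOLD_VOLTS / slope


def extra_delay_ns(light_fraction):
    """Delay of the Master Clock pulse relative to a good connection."""
    return trigger_time_ns(light_fraction) - trigger_time_ns(1.0)


for fraction in (1.0, 0.8, 0.6):
    print(fraction, round(extra_delay_ns(fraction), 1))
```

In this toy model, losing half the light doubles the time needed to reach 5 Volts, and a delay of 73 nanoseconds would correspond to roughly 58% of the light getting through. The real relationship depends on the electronics details skipped above.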

What about the drift? It turns out the Master Clock itself was not properly calibrated. After it fired with the laser pulse, at the start of each 0.6 second data chunk, it then drifted slightly during the next 0.6 seconds, by a total of 74 nanoseconds. Then it would be re-synchronized 0.6 seconds later (albeit incorrectly, due to the improper fiber connection) by one of the laser pulses coming down the fiber. On average, its drift would have an effect of 37 nanoseconds, but it would be worse at some times and better at others during the 0.6 second chunk of data. This effect would make the neutrinos appear to arrive late, but turns out to be insufficient to cancel the effect of the fiber.

OPERA’s Scientifically Sound, Falsifiable Model for What Went Wrong

Now — and this is where it becomes a scientific story of its own — the OPERA folks, thinking like good scientists, developed a clear and precise hypothesis for what was going on. If these two problems were the full explanation for what was going wrong at OPERA, then they should have had the effect shown in Figure 6 on all of OPERA’s timing measurements — for neutrinos and for anything else that happened to pass through their experiment.

When the fiber was screwed in correctly, but the Master Clock was drifting, neutrinos (or anything else) that arrived at the start of the 0.6-second chunk of data should have been properly timed, but those that arrived later should have had timing off by an amount that grows linearly in time across the data chunk, reaching 74 nanoseconds apparent-late-arrival for those that arrive at the end of the 0.6-second data chunk, or an average of 37 nanoseconds late-arrival across the data chunk. This is shown as the green line.

When the fiber was not screwed in correctly, then the Master Clock drift would have had the same effect as before, but all of the timing should have been shifted earlier by 73 nanoseconds. That is shown as the red line.
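The two lines of this model can be written down in a few lines of Python. This is only a sketch of the hypothesis as described here, using the 74 nanosecond drift, the 73 nanosecond shift and the 0.6 second chunk from the text; the function name `timing_offset_ns` and the sign convention (positive means apparently late, negative means apparently early) are choices made for illustration.

```python
CHUNK_SECONDS = 0.6      # length of one data chunk
DRIFT_AT_END_NS = 74.0   # Master Clock drift accumulated by the end of a chunk
FIBER_SHIFT_NS = 73.0    # shift caused by the badly connected fiber


def timing_offset_ns(t_seconds, fiber_ok):
    """Predicted timing error at time t after the start of a chunk.

    Positive values mean apparent late arrival, negative values mean
    apparent early arrival.
    """
    if not 0.0 <= t_seconds <= CHUNK_SECONDS:
        raise ValueError("t_seconds must lie within one data chunk")
    drift = DRIFT_AT_END_NS * t_seconds / CHUNK_SECONDS  # grows linearly
    shift = 0.0 if fiber_ok else FIBER_SHIFT_NS
    return drift - shift


for t in (0.0, 0.3, 0.6):
    green = timing_offset_ns(t, fiber_ok=True)    # fiber screwed in correctly
    red = timing_offset_ns(t, fiber_ok=False)     # fiber screwed in badly
    print(f"t = {t:.1f} s   green: {green:6.1f} ns   red: {red:6.1f} ns")
```

The green line runs from 0 to 74 nanoseconds late across the chunk, and the red line is the same line moved down by 73 nanoseconds, so anything arriving at the very start of a chunk with the fiber badly connected appears about 73 nanoseconds early.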

Now they had a clear, falsifiable hypothesis to check. But how to check it?

Clearly it is essential to know how long the fiber had been improperly screwed in. They were apparently able to find a photograph of the apparatus from October 13th — I don’t know when they found that photo — which by chance shows (Figure 7) that indeed the fiber was screwed in wrong and apparently by the same amount as in the photo from December 8th (Figure 3). This fact alone invalidates the initial result from OPERA-2; the result cannot be trusted once this is known.

Unfortunately they could not find earlier photos to clarify if and when the fiber’s connection had been unscrewed during the previous years, when OPERA-1 was running. And to test their hypothesis, they really needed to know when this problem started.

How the Model was Tested

Lacking that, they managed to get the same information by performing a crucial check comparing timing at OPERA and at the nearby LVD experiment using cosmic-ray muons that pass through both detectors. [You should read that article now before continuing, if you haven’t already.] In Figure 2 of that article you will see that this cosmic-ray muon measurement revealed a 73ish nanosecond shift in the timing between OPERA and LVD that started in mid-2008 and ended when they screwed the fiber back in at the end of 2011. (Another puzzle: it is still not known why they didn’t consider and/or carry out this check before going public with their result last September.) Obviously that implies that the fiber was unscrewed and then not screwed back properly sometime in the middle of 2008, before OPERA-1 began. I will now show you that armed with that information, the OPERA folks could test the hypothesis shown in Figure 6 in detail.

First, using the method I described in the above-mentioned article, they compared the timing of the cosmic-ray muons that passed through both OPERA and LVD during the periods (before mid-2008 and after Dec 13th, 2011) when the fiber was screwed in properly (normal condition [NC]) and found the data (green dots in Figure 8) agreed with the prediction of their model, the green line in Figures 6 and 8.

Then, they studied the muons that arrived when the fiber was screwed in badly (anomalous condition [AC], from mid-2008 through December 13th, 2011) and they saw that those muons (red dots in Figure 8) are indeed shifted down to the red line predicted by their model.

Having confirmed the model using cosmic-ray muons, they had now shown somewhat convincingly that they understood what was wrong with their timing and when it was wrong. But as good scientists, they weren’t going to stop there.

What they did next was to check whether their neutrino measurements were consistent with what they’d learned, independently, from the cosmic-ray muons. They had to account for the fact that neutrinos in OPERA-1 and OPERA-2 were not evenly distributed across their 0.6 second chunks of data. For technical reasons having to do with how CERN delivers neutrinos in the direction of OPERA, these neutrinos tended to arrive on the early side of the chunk for OPERA-1, and on the very early side of the chunk for OPERA-2. Based on this, they could now predict, given their model for the timing problems, what they should have measured for the arrival times of the neutrinos in OPERA-1 and OPERA-2; these predictions are shown as two red dots in Figure 6. The actual data giving 60 nanosecond early apparent arrival is shown in Figure 8: the black circle marked “Standard ν runs” for OPERA-1, and the open circle marked “Bunched ν runs” for OPERA-2, and their locations agree with the red dots in Figure 6.

You see that it is a bit of an unpleasant and unfortunate accident that both versions of OPERA came out with the same result. Had CERN’s neutrino beam spread OPERA-1’s neutrinos out more evenly across each data chunk, the time delay measured at OPERA-1 would have been perhaps half as big as that of OPERA-2. They, and we, would have known already in November that something was wrong. And moreover, if the problem with the fiber’s connection had occurred, say, between 2009 and 2010 instead of in 2008, in the middle of OPERA-1, that too would have made OPERA-1 different from OPERA-2; in fact the change would already have been noticed in OPERA-1’s data. And finally, it would appear that a statistical fluke made the two results a bit closer than they would have been expected to be, given this model for the problems; OPERA-2 lies just a little bit above the prediction, and OPERA-1 a little below it. So OPERA had two and a half strokes of very bad luck… not unusual when an experiment goes wrong.
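To make the first of these strokes of bad luck concrete, here is a small Python sketch that averages the model's timing error over different arrival patterns within a chunk, with the fiber badly connected. The two arrival patterns below are hypothetical, chosen only to show the trend; they are not OPERA's actual neutrino distributions, and `mean_offset_ns` is a name made up for this example.

```python
CHUNK_SECONDS = 0.6      # length of one data chunk
DRIFT_AT_END_NS = 74.0   # Master Clock drift accumulated by the end of a chunk
FIBER_SHIFT_NS = 73.0    # shift caused by the badly connected fiber


def mean_offset_ns(arrival_times_s):
    """Average apparent timing error (negative = early), fiber badly connected."""
    offsets = [DRIFT_AT_END_NS * t / CHUNK_SECONDS - FIBER_SHIFT_NS
               for t in arrival_times_s]
    return sum(offsets) / len(offsets)


# HYPOTHETICAL arrival patterns, for illustration only.
evenly_spread = [CHUNK_SECONDS * (i / 100) for i in range(101)]  # whole chunk
bunched_early = [0.01 * (i / 100) for i in range(101)]           # first 10 ms

print(round(mean_offset_ns(evenly_spread), 1))  # about -36, half the fiber shift
print(round(mean_offset_ns(bunched_early), 1))  # about -72, nearly the full shift
```

Neutrinos spread evenly across the chunk would pick up the average 37 nanoseconds of drift and so appear only about 36 nanoseconds early, while neutrinos bunched at the very start would show almost the full 73 nanoseconds. That difference is what would have flagged a problem.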

But now, finally, their solid scientific detective work pays off, and they can walk off the stage with their heads as high as any experiment that’s been through a catastrophe possibly could. Because with their model working well (and they added another small check of the model which I’ve omitted for brevity), they can now work backwards and remove the effect of the improperly connected fiber, and of the clock drift, from the OPERA-2 measurement. They aren’t quite done with this yet, but they have a preliminary result, and it says that the difference between the corrected arrival times and the predicted arrival times is -1.7 nanoseconds with a statistical uncertainty of at least 3.7 nanoseconds, experimentally consistent with zero (though note that this preliminary result contains an incomplete estimate of the uncertainties, so the overall uncertainty will go up in the final result). In short, OPERA-2’s neutrino arrival time is now apparently consistent with Einstein’s prediction, though they still have more checks to do before they can say this with the confidence they want. So when this revised result is complete and appears in a preprint document, it is very likely OPERA will agree with the ICARUS experiment’s result from a couple of weeks ago, though within OPERA they must be very frustrated indeed that ICARUS used OPERA’s hard work (in setting up the unprecedentedly accurate timing and distance measurements from CERN to the Gran Sasso lab) to beat them to the finish line.
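What "consistent with zero" means here can be checked with one line of arithmetic, sketched below in Python. The helper name `pull` is made up for this example, and only the statistical uncertainty quoted above is used, so the true significance will be even smaller once the full uncertainties are included.

```python
def pull(value, uncertainty):
    """Number of standard deviations by which a measurement differs from zero."""
    if uncertainty <= 0:
        raise ValueError("uncertainty must be positive")
    return abs(value) / uncertainty


# Preliminary corrected OPERA-2 result quoted in the text: -1.7 +/- 3.7 ns.
print(round(pull(-1.7, 3.7), 2))  # 0.46
```

A difference of less than half a standard deviation is exactly what one expects from ordinary statistical scatter around zero.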

A Few Personal Comments

Note added: I recommend reading Antonio Ereditato’s letter about his resignation as leader of OPERA; it gives his point of view in some detail, and puts some perspective on these comments.

The OPERA team, as a whole, has done what any good scientific team would do: they’ve worked hard to make the best possible analysis of their experiment, and fix the problems they’ve found using a careful, clever, creative, and scientifically convincing technique. For this they deserve kudos and respect.

But that said, their speed measurement crashed and burned in a very distressing fashion. Any good experimentalist will tell you that when you think you might have made a radical discovery, one thing you need to do is make a list of all the elements of your experimental apparatus that could affect your measurement and check them all, every single one, in multiple ways. Clearly, you check all the wires and connectors in more than one way, one by one, especially those on which the whole experiment depends, such as those going into and out of the experiment’s master clock. And if your measurement is based partly on a precision timing measurement, you want to have made abundant checks that you don’t have a problem in your timing equipment. It is more than a little mystifying that OPERA’s neutrino-speed experts did not have a complete yearly check of their timing calibration, or at least a final thorough check after OPERA-1 was over before announcing a result. How could a big problem that arose in 2008 go undetected until the end of 2011, after the experimental result was announced? Maybe there was a good reason that isn’t obvious, but right now the OPERA folks who were in charge of this part of the experiment (and I remind you that the neutrino speed measurement was a small part of the OPERA program, so most of the OPERA people were not directly involved) have some serious explaining to do.

There is also the question of why the discovery of a problem in mid-December was not revealed (possibly under pressure from some direction, though the details remain murky) until mid-February. I do see one reason why there may have been such a delay. [However, the statement from Ereditato suggests that the OPERA/LVD collaboration only occurred quite recently, and casts doubt on the rest of this comment; instead it suggests that there was some other internal concern until February, but it isn’t clear what it was.] Perhaps not everyone in OPERA was convinced that the fiber and the clock were the source of the main mistakes (after all, there was a complicated combination of a shift and a drift in opposite directions) and as we’ve seen, a crucial way to check the story’s consistency was to carry out the comparison of the timing at OPERA and at LVD using the cosmic ray muons that enter both experiments. There are only about a dozen of these muons per month, so in order to gather enough of a statistical sample after the fiber was properly screwed in so that they could be sure the problems had been fixed, they needed to take data for much more than just a couple of weeks.

Nevertheless, while it is not unethical to want to study a problem in detail before talking about it — it reflects wanting not to create additional confusion by saying something that itself turns out to be wrong, and also reflects wanting to preserve one’s reputation by briefly (!) not revealing a mistake until it can be fully diagnosed and corrected for — in this particular case questions have to be raised, because quite a few other scientists were working hard on future efforts to confirm or refute OPERA’s measurement. The fact that internal questions about OPERA’s results were not revealed for two months may have wasted the time and money of a number of scientists who would have chosen to do something else had they known about this. In my mind this may have crossed a line — not a line of professional ethics, perhaps, but certainly a line of professional judgment. Of course, there may be issues that the OPERA people were dealing with of which I am not aware. But I think we are owed an explanation, even if it is an embarrassing one for some individuals.

Overall, this story currently appears to be a classic case of how not to handle a possibly sensational result. What are the lessons?

- You do not go full-speed ahead with a big public presentation and a press conference if you haven’t, in fact, done all the basic internal checks of your equipment.

- You don’t say “we have a timing anomaly (oh, and by the way, if it is not a mistake you can interpret it as a violation of Einstein’s relativity)” because the only thing people outside physics will hear is “violation of Einstein’s relativity”; you have to bend way over backwards to call it a “timing anomaly” again and again and again, much louder and more assertively than the OPERA people managed to do it.

- Instead of having a big public talk at CERN (thereby dragging other people into your mess) when you announce your result, you should announce the anomaly quietly, at a little mini-workshop, and save your big public talk (and any damage to your reputation, and everyone else’s, the guilty and the innocent) until you confirm it with a second measurement. [Granted, at OPERA that second measurement probably would have been OPERA-2, which did in fact confirm OPERA-1, so in this case we would still have had some of the same hullabaloo; but it would have been much easier to forgive the OPERA leadership in that case.]

- Or if you do decide to have a big public talk when you announce your initial result, you don’t then reveal that you’ve found and fixed your errors at a quiet little mini-workshop at Gran Sasso. That’s like newspapers printing the corrections to their erroneous front-page stories at the bottom of page seven (which happened on a regular basis amid the often dreadful mainstream-media reporting on this story.) I hope there will at least be a big public talk when the updated OPERA-2 result becomes official.

Scientific mistakes are forgivable; at the forefront of knowledge, where new techniques are being tried out for the first time, mistakes are going to happen. Some mistakes are worse than others, but even bad ones are going to happen to good people sometimes. The issue here is not the scientific errors themselves, but the bad judgment about how to handle a potentially sensational but probably wrong scientific result. It is impossible to say whether or not the leaders of OPERA, who damaged their own reputations — along with those of their experiment and their collaborators, of a couple of supporting laboratories, of the research field of particle physics and perhaps of science as a whole — will pay a long-term price. But for now, their resignations from their leadership positions in OPERA (though not from the OPERA experiment) do not seem inappropriate.

97 Responses

Whoa! Just like dark matter and dark energy, there must be some dark force that keeps scientists from tightening the wire and rerunning the experiment. It’s been 3 years. Or maybe they are smarter than we think. Or maybe they think they are smarter.

An interesting look at the situation; everyone should read it and get acquainted with the subject.

It is possible that the CERN experiment indicates that the accepted value of c, based as it is on measurements in the non-absolute vacuum of space, is too low? If our local space has a refractive index of > 1.000025 then the correct value of c would be sufficiently large for the neutrinos not to be violating the laws of special relativity; though admittedly such an index is quite a large value.

Another really interesting post

The FTL neutrino can only gain time on light by the length of the pulse. That is because the last neutrinos can only catch the first ones, never pass them.

Excellent

Oh, I thought you were referring to the browser!

Suppose the improperly attached fiber optic cable caused a faster-than-light measurement of about 75 ns, and a clock oscillator ticking too fast caused an average late arrival of 37 nanoseconds. That means the two flaws together can resolve the discrepancy only at the 37 ns level. The question of how to account for the other 38 ns of discrepancy is still open.

Here is an explanation of what really happened in the OPERA experiment:

http://gsjournal.net/Science-Journals-Papers/Subjects/Relativity%20Theory

Dear Matt, I would like to know where the remaining 37 ns disappears, please?

It would be useful to know from what date CERN-OPERA has used a single GPS satellite for clock synchronization, and which of two possibilities it prefers: either the satellite flying (almost) parallel, or (almost) perpendicular, to the line CERN – Gran Sasso.

I think that you have a very opinionated article here. You seem to be very judgemental on the lead team at OPERA. I understand that they should have carried out further calibration experiments, but they have some of the most up to date equipment in the world and they are the experts in their field. They simply found a curious result and after months of trying to determine a cause of error for their results they were forced to publish it for scrutiny. That is the whole idea of peer review!! It is not the fault of the team that the media picked up on the results and falsely claimed a breakthrough in modern physics. The team then efficiently worked through the apparatus and identified the source of error. Yes, it may have caused a commotion, but they did their job, carrying out an experiment and seeking the reliability and validity of the data they collected!

Now hold on… Surely someone else will notice that this explanation is “lacking”. The 70 ns fiber delay is repeatable on top of a much much larger delay. This total delay WAS measured and accounted for and would not, in itself, cause the error. You could have ANY arbitrary consistent delay here and it shouldn’t have mattered.

The “jitter” might make the data “noisier”, but would not in itself cause the problem either. Neither would both taken together. The true problem wasn’t either of these.

If this “fixed” the problem, then the problem wasn’t the delay per se. What it was was that someone didn’t measure the delay and jitter properly in the first place and instead just inserted factory specs into the time for the delay chain.

Your explanation looks good, but your problem is that you automatically associate changes in Time delay (Fig.2) with incorrectly (correctly) plugged FC. There is a possibility that the culprit could be found on the stage “Satellite – GPS receiver”. The common view synchronization method using single satellite can (by accident) be performed by satellite moving parallel or perpendicular regard the abscissa CERN-Gran Sasso. If in 2006 and after December13, 2011 the synchronization act was performed by satellite moving perpendicularly, and during Ereditato and Autiero era the satellite moved parallel, then I can show, that the connector has no influence on the issue.

The assumption is that time is discrete, not continuous. If so:

Particles with mass go 10**20 times C, if they go at all, but for a very short distance.

Only the later neutrinos went faster for 20 meters, as the first had to change space in any burst.

Particles without mass can go 90% of the distance between galaxies at that speed.

Gravitons with this range explain ALL dark energy, and include such other events as flybys, the Pioneer Anomaly, all warped galaxies, the stability of bar galaxies, etc.

The devil hides in the details. Why were neutrinos in OPERA-1 and OPERA-2 not evenly distributed across their 0.6 second chunks of data?

There are big mistakes in design of experiments to detect the superluminal neutrinos. “In the LNGS experiments (May 2012), the 17-GeV muon neutrino beam consisted of 4 batches per extraction separated by about 300 ns, and the batches consisted of 16 bunches separated by about 100 ns, with a bunch width of about 2 ns.”

ONLY neutrinos from the weak decays INSIDE the strong fields in baryons are superluminal. In low-energy regime of the neutrinos, almost all neutrinos are moving with the speed c because they are produced outside the strong fields whereas in very high-energy regime almost all neutrinos are superluminal and have speeds from 1.000000001c to 1.000000002c. The central value is 1.0000000014c. Such case concerns, for example, the supernova SN 1987A. The accuracy of the measurements in the LNGS experiments (May 2012) was too low to detect superluminal neutrinos moving with speed 1.000000002c or lower.

Lifetimes of the particles which decay due to the weak interactions inside the strong fields are as follows:

2.2•10^-6 s for the muons and then their maximum speed is 1.000071c,

2.5•10^-7 s for the relativistic pions and then their maximum speed is 1.0000239c,

1.7•10^-15 s for the W bosons and then their maximum speed is 1.000000002c.

For the 17-GeV, most frequently the superluminal neutrinos appear about 250 ns from the beginning of collisions of the nucleons (it is for the lifetime 2.5•10^-7 s). But mostly the neutrinos are moving with the speed c. This means that the pulse profile in the LNGS experiments is incorrect. We should separate the neutrinos emitted about 250 ns from the beginning of a collision of the nucleons (in the OPERA or ICARUS experiment, width of a bunch should be much lower than 60 ns) and detect WHOLE pulse reaching the Gran Sasso. Moreover, we should detect big number of neutrinos because only not numerous neutrinos are superluminal.

Thanks; you DID follow up! Almost no one does.

If a bad connection caused the problem, then put the cable in the same way, “bad”, and see if the same “bad” results come back. Thanks again….. Oh I have a paper that states that neutrinos DO go FTL. So you see I am on the other side of this story. Richard Bauman

Oh, ok. Well, you’re out of luck; four experiments have already shown that your theory is wrong. http://profmattstrassler.com/2012/06/08/end-of-the-opera-story/ And as for putting in the cable the “same” way, that’s not possible when you have an imperfect connection. However, they did show that the bad cable leads to the observed time delay. And they also proved that the cable was out of alignment by the right amount to explain their FTL neutrino result by combining their data with that from the LVD experiment. http://profmattstrassler.com/2012/03/30/operas-timing-issue-confirmed/

Any good scientist accepts the verdict of experiment. You have your chance now. Fail, and lose your reputation permanently.

1) Rather die without reputation than die ignorant.

2) I accept all experiments for what they are.

3) The neutrino goes 10**20 faster than light for the first 20 meters.

4) Our difference stems from this: you believe (if I may) that time is continuous, and for me it is discrete.

There is a paper at the Niels Bohr Inst., entitled “Time and its Properties” which states this, and the unit of time used in the proof is called a “dick” much like tick, tick, tick. Thank you.

So how many times did THEY put the cable back in wrong and rerun to prove YOU are right?

Your question doesn’t make logical sense; please read it carefully, and ask again more clearly.

This is a big joke in 21st century!!!!

“…no one from outside would have ever been able to suggest that perhaps they hadn’t screwed an optical fiber in correctly!” Not likely, I agree, but some decades back, an oscillation was seen in the pion decay to muons signal at LAMPF. The exciting thought was that this was some kind of reflection of neutrino oscillations which were just beginning to be taken seriously by experimenters. After hearing from theorists that this did not seem at all possible, Dave Bowman (on the experiment) separated two signal cable runs and the effect went away. Ever since, anyone associated with Los Alamos at that time has always asked about such ‘trivial’ equipment questions as connections and cable arrangements. But as you say, only the people working on the equipment could know precisely where to look. Thanx for your most interesting ‘conversation’.

OPERA, ICARUS, LVD, BOREXINO presented preliminary results of the new neutrino speed measurements in May 2012, consistent with the speed of light:

http://francisthemulenews.wordpress.com/2012/06/08/la-medida-correcta-de-la-velocidad-de-los-neutrinos-de-opera-en-2011-y-los-nuevos-resultados-de-2012/ (Blog in Spanish)

Borexino: δt = 2.7 ± 1.2 (stat) ± 3(sys) ns

ICARUS: δt = 5.1 ± 1.1(stat) ± 5.5(sys) ns

LVD: δt = 2.9 ± 0.6(stat) ± 3(sys) ns

OPERA: δt = 1.6 ± 1.1(stat) [+ 6.1, -3.7](sys) ns

OPERA has also revised their 2011 results and will resubmit it to the “Journal of High Energy Physics”:

δt = (6.5 ± 7.4 (stat.)+9.2 (sys.)) ns

Also MINOS at Fermilab corrected their former results

δt = −11.4 ± 11.2 (stat) ± 29 (syst) ns (68% C.L)

See also the updated CERN press release

http://press.web.cern.ch/press/PressReleases/Releases2011/PR19.11E.html
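As a rough check on "consistent with the speed of light", the four May 2012 values listed above can be compared with zero. This is only a sketch under stated assumptions: statistical and systematic errors are treated as independent and added in quadrature, and for OPERA's asymmetric systematic the side facing zero (3.7 ns) is used. Neither choice comes from the experiments themselves, and the names `results`, `total_error` and `pull` are illustrative.

```python
import math

# (central value, stat error, sys error upward, sys error downward), all in ns,
# copied from the list above.
results = {
    "Borexino": (2.7, 1.2, 3.0, 3.0),
    "ICARUS": (5.1, 1.1, 5.5, 5.5),
    "LVD": (2.9, 0.6, 3.0, 3.0),
    "OPERA": (1.6, 1.1, 6.1, 3.7),
}

def total_error(value, stat, sys_up, sys_down):
    """Quadrature sum of stat and sys errors (assumes they are independent)."""
    # Use the systematic error on the side that faces zero.
    sys = sys_down if value > 0 else sys_up
    return math.hypot(stat, sys)

def pull(value, stat, sys_up, sys_down):
    """Distance of the central value from zero, in units of the total error."""
    return value / total_error(value, stat, sys_up, sys_down)

for name, r in results.items():
    print(f"{name}: {r[0]} ns, total error {total_error(*r):.1f} ns, "
          f"{pull(*r):.2f} sigma from zero")
```

Under these assumptions every one of the four values lies within one total standard deviation of zero.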

Thanks, Titus, for those details.

I believe the reason why they did not check the hardware before December is that they had to wait until CERN’s winter shutdown. After all OPERA’s main goal was still to measure neutrino oscillations and they could probably not start touching the setup while data accumulation was ongoing for the primary experiment.

You can wonder why they decided to publish before all that was done and it seems obvious from the response all this story got that they communicated badly on what they still had to check when they made their announcement. In an aside conference an OPERA member gave where I’m studying, he indeed pointed out that they had to recheck every experimental detail in the time to come, so I did not take the announcement about the optical fiber as an outrage, contrary to many people. However that point had sadly not been broadly diffused.

Matt I know I seem somewhat obsessed with the energy of the detected neutrinos – because I am. It’s a long story and I’m not going to bore you with it. I’m just trying to eliminate certain possibilities that I have come up with. There aren’t that many possibilities and the others are in the TeV or Multi-Hundred-TeV range, but one is at 1.47 GeV and happens to reproduce the 2007 MINOS finding of v/c-1 = 5.1×10^-5 for 3 GeV muon-neutrinos, below 1.47 GeV v/c-1 = 0. Another is at 16.01 GeV, and again below that energy v/c-1 = 0. I would like to eliminate both these possibilities if the OPERA & ICARUS data let me. My problem is do I eliminate these possibilities based on a “Corrected” OPERA and an ICARUS of unspecified energy. I just wanted you to understand why I keep going on about this. I promise I won’t bring this up again.

Earlier in the month Matt mentioned: “Plus she reads this website, which I appreciate very much.” I foolishly assumed she was a he until I read your comment concerning Eugenie Samuel Reich. Anyway, for anyone interested on the latest at MINOS (a U.S. neutrino detector) Ms. Reich wrote an interesting article in NATURE entitled “Particle physics: A matter of detail”.

At this point I only have one question: If, back in September 2011, or again in November 2011, OPERA had published its results without any mention of the energy of the neutrinos it had detected would we be satisfied with that? Then why are we satisfied when the March 2012 ICARUS paper makes no mention of the energies of the 7 neutrinos it detected?

Mainly because ICARUS already published their neutrino energy results in a separate paper. Sure, I’d like to know the energies, but (1) we already know the distribution of the energy of the neutrinos in the beam from CERN, and (2) ICARUS confirmed in their previous paper that these energies haven’t changed in flight. I know the energies of the specific 7 neutrinos can’t be too low or ICARUS would have failed to detect them. So they are almost certainly above 10 GeV, which is really all we need to know.

A technical question, out of curiosity (if anyone knows) – is the fact that the time delay in proper configuration is almost exactly an integer number of microseconds a design feature or a coincidence? (Not that this makes a difference to the process, of course.)

Coincidence, I’m sure.

Timing and triggering errors happen all the time in physics experiments. Sometimes we spend days before we realize that something silly like the caretaker’s lawnmower is creating interference with our badly shielded cable. I think people are exaggerating in their minds the competency of the research team here. They might be recalling movie images of NASA missions or of the LHC experiment in “Angels and Demons”. In real life, a lot of the actual research, even on projects such as this, is mainly carried out by Ph.D. students and post-doctoral researchers in their mid to late twenties. They are experts in science, not engineering. These are not missions to the Moon or Mars, put together by a team of expert engineers.

Heck, in the photos above, that looks like one of the bog standard PCs here in our tiny lab! Even researchers like myself forget just how crude and cobbled together some of these top experiments can be. But it makes some sense when you realize that this is fundamental research (never been done before) and that research budgets can be extremely tight.

People forget that fundamental research usually

Just one question… General relativity does have some solutions like the Alcubierre metric; in blatant theory, perhaps around a neutrino one such can form for an extremely short amount of time; such an object should not even be detectable unless the “bubble” collapses. So the neutrino would only fly FTL for a small amount of time, and in the end the average speed over a large enough distance would be subluminal.

Is there any imaginable way how to measure if something like that could not realize itself?

It is not clear the solutions proposed by Alcubierre are physically realizable; they are at best controversial. Moreover they require huge amounts of energy, and presumably would not occur spontaneously in a terrestrial laboratory.

Actually I find that he indeed has a degree on master level from Poznan university, the physics faculty:

I find when I google him the following:

“it began when I had graduated from the Poznan* University in Physics and after

I married my wife Krystyna w 1971. My wife ‘dragged’ me to Szczecin where I started

to work in secondary school as a teacher of physics and astronomy.”

To Strassler: You claim here in public that he is a “self-proclaimed” physicist. That is a very hard personal attack against one of your fellowmen, considering that he says he is a physicist. Before I conclude on this matter I must ask you to prove your statement that he does not have a higher university degree in physics. If he has a university degree in physics and you despite of this continue to say that he is a self-proclaimed physicist then you are wrong in every possible way. You just can’t say that only on the ground that you strongly disagree with his theory. Then you have to say that: He has a higher formal degree in physics from a university, but that you do not agree with his theory. Then he has every right to say that you have a higher degree in physics but that he does not agree with you in different physics questions.

I wrote nothing about the reference system. There is sqrt(s) [GeV].

I am a physicist. I do not try to advertise my theory. I just try to explain the experimental data concerning the superluminal speeds of neutrinos within coherent model.

The rest masses of the muons, pions and W bosons are quantized so there appear the three different speeds of the superluminal neutrinos as well. The described first threshold for energy (about 3.6 GeV) appears in the QCD as well and concerns the function R(s) = f(sqrt(s)). For energies higher than 2 GeV and lower than about 3.6 GeV the R(s) = 2 whereas for energies higher than 3.6 GeV and lower than 50 GeV the R(s) is 3.8 – 3.9. This means that at least partially the R(s) function is the staircase-like function. That means that sometimes energy is observer-independent.

It is your website so if you wish I can stop posting there my explanations.

Sincerely,

Sylwester Kornowski

You are self-proclaimed. And you are wrong about the science on so many levels there isn’t much point in discussion, I am afraid. The square root of “s” is not energy; it is the invariant mass of the electron-positron system. It is only energy in the reference frame of the laboratory that ran the electron-positron experiment. If you had been living on the moon, watching the earthlings do the experiments to measure R(s), your measurement of s would have been the same, but your measurement of the electron and positron energies would have been different.

The Everlasting Theory shows that the function describing how the neutrino speeds depend on their energies is the staircase-like function. There are only the three stairs. For energies lower than 3 – 3.6 GeV we obtain the maximum speed equal to 1.000071c and this value should be observed in the MINOS experiment. For energies from 3 – 3.6 GeV to 191 -227 GeV we obtain the maximum speed equal to 1.0000239c and this value should be observed in the OPERA experiment. We know that it was! For energies higher than 191 – 227 GeV we obtain the maximum speed equal to 1.000000002c and this value should be observed in the supernova SN 1987A explosion. We know that it was.

Now the following question arises: why did we obtain the correct results, i.e. results consistent with the generalized theory of neutrinos described within the Everlasting Theory, in the OPERA experiment in spite of the cable failure? And the answer is obvious. I claim that the next experiments will confirm that the OPERA results are correct. In the ICARUS experiment, the density of information was much, much lower than in the OPERA experiment so we should not take such results seriously.

The superluminal neutrinos appear only when the weak decays of the muons (the MINOS experiment), relativistic pions (the OPERA experiment) and the W bosons (the SN 1987A explosion) take place inside the baryons i.e. inside the strong fields. We should not shorten the neutrino impulses because for sufficiently short impulses we can eliminate almost all superluminal neutrinos.

Everyone reading this should know that what you are describing is your own personal theory and that you are not a professional physicist. The fact that you would suggest that neutrinos have three different speeds, one for one range of energy, a totally different one for a second range of energies, and a totally different third one for a third range of energies, shows you know very little about nature. Energy is observer-dependent; so is speed; and the way they depend on each other makes it impossible to make your suggestions mathematically consistent.

Furthermore, your second paragraph shows you haven’t understood what I wrote. The cable problem was also present for the long neutrino pulses. And your time scales are off by many orders of magnitude. What you call “short” pulses is still incredibly long compared to the time scale for things to happen inside of baryons. The effect you suggest is completely unphysical. OPERA simply hasn’t had time to recalibrate the OPERA-1 measurement yet.

But you’ve had your say. And it’s here, on permanent record, for all to see.

This website is for the purpose of explaining mainstream understanding of physics to non-experts. Further advertising of your personal “Everlasting Theory” on this website will be deleted.

The problem started with the equipment manufacturer cramming as many miniature connectors as possible in the smallest space. Unless you have a three-year-old’s hand size, or follow a left to right cable installation procedure (for a right handed tech), where you have some room to work, stupid things like this happen.

If fibers have to be removed for checking, in a cable jumble like this, the fibers used probably should have hex type (SMA-905), instead of knurled backshells. Then, at least , one can use a fiber wrench (Ocean Optics FOT-SMAWRENCH) to give you some room to set the connector.

I’ve also seen plenty of problems in the past, with the centering and alignment of optical fibers in the connector, because of holes in the bushing, inconsistent with the fiber size. And this from a major manufacturer whose QC was less than optimal. Only after I started having screwy results with spectroscopy did I think of checking the fiber ends under a microscope. Let alone that the fibers were custom built for me.

Incoming QC checks should be a part of an experiment like this. Everyone falls prey to mistakes like this.

I have photos illustrating the problems, to substantiate, if Prof Strassler is interested..

Can you clarify what you have photos of?

There is something with this cable-issue that STINKS [Crude remark edited by host]. I can’t point my finger on to where exactly the smell comes from, but there is definitely a smell here.

The cable failure is so corny, trite and simple. I know that even my 82 year old mother knows that the cables must always be checked first when something is wrong on her television. Don’t give me any more of the b.s. regarding that this is sooooooo….. tricky to figure out in a big and advanced experiment like the one that OPERA performed. IT IS NOT. I read that Prof Strassler also thinks so even if he writes it in a far more polite way than me. A sad but necessary consequence of this fact is that it makes people begin to speculate. What really happened? No one can ever convince me that it was actually the cable that was a bit loosely screwed and that they really had forgotten to check this before they went public. As you all have commented on, OPERA’s arxiv paper from November 2011 gives a long chapter that deals solely with the optical fiber cable and the master clock. No one can convince me that Antonio Ereditato and Autiero did NOT have methods that would capture a fault in this cable as long as it was one of the most important parts of the experiment and paper. They just can not be that stupid. It is not possible.

I have now read quite a lot about the people in the leadership behind the Opera collaboration. And there is one person, whom I will not mention by name, that makes me wonder. The person whom I am speaking of here is today’s hero. Yes. This person’s reputation rose significantly as a direct consequence of the cable scandal. In this context I feel sad to see the pictures of the two guys Antonio Ereditato and Autiero, (they look so nice and smiling), that resigned and got their reputations damaged.

Conclusion: Is there an untold story behind this scandal ?

You are way too suspicious. You both overestimate and underestimate people in this comment.

The thing that should convince you that it was the cable is the data that I showed in this article (and the one to which it links). I don’t know who checked the wires and took the photos and studied the cable effect, but I am sure it wasn’t just one person involved; that’s way too much work. So there was a team of people who did the timing check, found the cable, screwed it back in, redid the timing check, and saw the shift in the time. You can’t make a conspiracy there. Then there’s the muon comparison data from combining LVD and OPERA. A whole team from LVD is involved; the comparison of OPERA data and LVD data on the muons is basically of public record. What, are you suggesting that some people in LVD moved their data around to make something look nice at OPERA? We *know* from LVD’s data that OPERA’s timing went off in 2008, and stayed that way through 2011.

Are you suggesting someone sabotaged the experiment? No idiot would sabotage the experiment in this way, back in 2008; they would have had every reason to expect the problem would have been found over the ensuing three years. And no one back then could have known that the measurement was going to be carried out start to finish; it took a heck of a lot of work between 2008 and 2011, and it could have failed for other reasons.

Are you suggesting the cable failure story was made up to protect somebody? Well, aside from the fact that there’s all this data supporting it, if you wanted to make something up wouldn’t you make up something less embarrassing-sounding?

Now, you say “the cable failure is corny, trite and simple”. Well, let me tell you something. When experiments fail, it is often something corny trite and simple — a right-under-your-nose problem. Where do you think the smartest people make their mistakes? Two places: (1) something extremely complicated that only somebody with tremendous intelligence and knowledge would have recognized, and (2) something so obvious that nobody realized that nobody had actually checked it. I’m sure the OPERA team checked their timing many different ways and many times. Somehow this particular failure mode escaped them; only they can tell us how, but it is completely believable. In my career, I have seen many measurements withdrawn, and at least three of them were due to something equally simple… the OPERA mistake is not by any means the most embarrassing case. So, believe it — history shows that extremely smart people can, occasionally, even as a group, do a single very bone-headed thing. And it only takes one mistake to doom an experiment. That is why experimental science is extremely difficult. You have to be perfect.

Finally, if you’re suggesting people are lying, think again. Making a scientific mistake, even a dumb one, is known to be a risk of doing experimental science, and the only price is painful embarrassment. Leading your experiment into a media frenzy and mismanaging the process is bad, and the price is having to step down from leadership positions within the experiment, but with the possibility of running other experiments in the future. But lying is scientific misconduct. If these people lied, they would be expelled from the collaboration, lose their grant funding, would never be permitted to run another experiment, and would potentially face severe penalties at their university. I think it very unlikely that anyone took that risk in such a highly scrutinized situation, amid a collaboration of dozens of people and with the eyes of the world watching.

Dumb mistakes happen all the time, and the price is temporary. Lying happens rarely, and the price is permanent.

By the way — since mistakes happen commonly, scientists in my field and nearby ones always insist on multiple confirmations of a result from multiple points of view before they take it too seriously and start to have confidence in it.

“After it [the master clock] fired with the laser pulse, at the start of each 0.6 second data chunk, it then drifted slightly during the next 0.6 seconds, by a total of 74 nanoseconds. Then it would be re-synchronized 0.6 seconds later (albeit incorrectly, due to the improper fiber connection) by one of the laser pulses coming down the fiber.”

This looks slightly imprecise; the master clock is never resynchronized. The drift is in the freestanding clock generator on the PCI card (the 10 MHz Vectron OC-050). That continuously drifts at the rate of 74 ns every 0.6 s. What gets reset is the DAQ FPGA’s rough counter, which is reset every 0.6 s from the repetitive signal output by the PCI card to the DAQ FPGA (the DAQ reset signal – this is derived from the problematic 1 msec pulse and hence is not affected by the drift). The DAQ FPGA’s finer counter which counts in tens of nanoseconds, by definition, starts again at value 0 when the reset signal comes in.

I don’t understand Sioli’s correction (page 23) for the drift for the BB case: 124.1 x 10^-9 x 75.3 x 10^-6. Shouldn’t they go through each detected neutrino event for OPERA-2, figure out where in the DAQ cycle it was generated and individually apply the clock drift correction as: t-in-DAQ x 124.1 nsec/s? Or is that 75.3 msec actually sigma t-in-DAQ for all the BB events? Sounds too low considering the DAQ window is 600 msec (is that what you meant by BB events were all bunched at the very early end of the DAQ cycle)?
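On the per-event versus average question: because the drift correction is linear in the time since the DAQ reset, averaging the per-event corrections gives the same number as applying the correction once at the average event time. A minimal sketch follows, using the 124.1 ns/s rate quoted above. The event times are hypothetical values chosen near 75 ms, not OPERA data, and `drift_correction_ns` is an illustrative name.

```python
DRIFT_NS_PER_S = 124.1  # clock drift rate quoted in the comment above

def drift_correction_ns(t_in_daq_s):
    """Drift accumulated t_in_daq_s seconds after the DAQ reset, in ns."""
    return DRIFT_NS_PER_S * t_in_daq_s

# Hypothetical event times within the 0.6 s DAQ cycle (illustrative only).
event_times_s = [0.070, 0.072, 0.078, 0.081]

# Option 1: correct every event individually, then average.
per_event = [drift_correction_ns(t) for t in event_times_s]
mean_of_corrections = sum(per_event) / len(per_event)

# Option 2: average the event times, then correct once.
mean_time_s = sum(event_times_s) / len(event_times_s)
correction_at_mean = drift_correction_ns(mean_time_s)

print(f"mean of per-event corrections: {mean_of_corrections:.2f} ns")
print(f"correction at mean time:       {correction_at_mean:.2f} ns")
print(f"drift over a full 0.6 s cycle: {drift_correction_ns(0.6):.2f} ns")
```

The two options agree only for the mean shift. The spread of the individual event times would still matter if the shape of the arrival-time distribution were being fitted.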

Continuously drifts? The Vectron OC-050 should be stable to 1 part in 1e10 per day. That is a lot less than 74ns per 0.6 seconds. The Vectron oscillator also has a 1 second drift specification. See http://www.vectron.com/products/ocxo/oc050_051.pdf

There are multiple clocks involved in OPERA timing. There is a reference clock on the PCI card that the optical fiber from above ground connects to. This clock is locked to the GPS derived signal on the fiber and is updated every 1 ms. There is also a master clock derived from a 20 MHz oscillator. This is a free running clock and is not locked to anything. It is the clock whose frequency was discovered to deviate by 124 ppb from nominal. At the start of the DAQ cycle (which is 0.6s in duration) the time kept by the reference clock is recorded and the time kept by the 20 MHz master clock is zeroed. Whenever an event happens, the time is recorded as the sum of the elapsed time kept by the master clock since the beginning of the DAQ cycle plus the reference clock time at the beginning of the DAQ cycle. The timing error from the reference clock is 73 ns, in the direction of making neutrinos appear to arrive early. For the bunched beam data (neutrinos bursts lasting a few ns) the average time of the events from the beginning of the DAQ cycle was 75 ms. This gives an average deviation (drift) of the master clock time of 124 x 10^-9 x 75 ms = 9 ns, in the direction of making neutrinos appear to arrive later. These add up to making neutrinos appear to arrive about 64 ns early.
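The arithmetic in this comment can be written out as a short sketch. It uses only the numbers quoted above (a 73 ns offset from the reference clock, a 124 ppb frequency deviation of the master clock, a 0.6 s DAQ cycle and a 75 ms average event time). The sign convention, positive meaning "neutrinos appear early", and the function name are choices made here for illustration.

```python
FIBER_OFFSET_NS = 73.0      # reference-clock error; makes neutrinos look early
MASTER_CLOCK_PPB = 124.0    # master-clock frequency deviation from nominal
DAQ_CYCLE_S = 0.6           # master clock is zeroed at the start of each cycle

def net_early_ns(t_in_cycle_s):
    """Net timing error t_in_cycle_s after the DAQ reset (positive = early)."""
    # 1 ppb of frequency error accumulates 1 ns of time error per second.
    drift_ns = MASTER_CLOCK_PPB * t_in_cycle_s  # makes neutrinos look late
    return FIBER_OFFSET_NS - drift_ns

for t in (0.0, 0.075, DAQ_CYCLE_S):
    print(f"t = {t * 1e3:5.0f} ms into the cycle: net error {net_early_ns(t):+.1f} ns")
```

At the 75 ms average event time this gives the roughly 64 ns quoted above. With the same numbers the two effects nearly cancel for an event at the very end of a 0.6 s cycle, so the net error depends on where in the cycle an event falls.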

“Drift” refers to the time difference measured by two clocks whose frequencies differ by 124 ppb, not a change in frequency. The oscillators are indeed stable but the frequency of the central clock’s 20 MHz oscillator is slightly different than what OPERA assumed it was. The reference clock on the fiber termination PCI card is very stable but was consistently off by 73 ns due to the loose fiber connector.

The oscillator isn’t 20 MHz, it is 10 MHz, multiplied on the board to generate a 20 MHz clock fed to the DAQ FPGA, which then again multiplies by 5 to generate the finer 100 MHz tick (the 10 nsec granularity timestamp). I think tomacorp’s point is that the OC-050, the oscillator-equivalent for this design, should have stayed at 10 MHz since its only standard frequencies are 5 MHz and 10 MHz. A 250 ppb deviation from 10 MHz is out-of-spec. A stable (precise) inaccurate value is of little help.

It might be premature to blame the manufacturer though–OPERA didn’t measure the OC-050 output directly. They put a scope on the DAQ FPGA, comparing the DAQ-reset to the GPS 1 ms trigger routed through the ROC/PMT back to the FPGA (Sirr, page 10). The problem could be anywhere on that circuitry within the board, or perhaps in board cooling.

Thanks AK. The 20 MHz clock is indeed just a 2x clock derived from the 10 MHz clock. I was confused because there was reference to a “20 MHz oscillator”. The OC-050 has an input that allows for frequency adjustment which can be pulled by +/-200 ppb minimum (see Electrical Frequency Adjustment in the data sheet). This could have been set incorrectly and would account for the 124 ppb deviation from nominal. Or the oscillator wasn’t trimmed properly at the factory. I don’t see any absolute frequency specification in the data sheet.

Yes, the OC-050 oscillator is intended to be adjusted by the user to the correct frequency. It should probably be locked to the GPS. Accurate frequency standards are built with many frequency control feedback loops inside of more loops. A source with long-term stability, such as Cesium, is used in the slowest loop, and this is combined with a wider bandwidth loop using something like Rubidium locked to it, followed by Quartz in an even wider bandwidth loop, etc. The whole system can be characterized with a phase noise frequency-domain measurement or a time-domain jitter measurement. The phase noise can be integrated to make a rough estimate of jitter. The amount of time-domain jitter is a function of the measurement bandwidth and measurement time. This corresponds to the frequency limits of the integration of phase noise.
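The "integrate the phase noise" step can be sketched with the standard relation between single-sideband phase noise L(f), in dBc/Hz, and RMS jitter: jitter = sqrt(2 ∫ 10^(L(f)/10) df) / (2π f0). The profile below is hypothetical and is not taken from the OC-050 data sheet. The plain trapezoid rule over a few points is only a rough estimate and tends to overestimate where L(f) falls steeply between samples.

```python
import math

def rms_jitter_seconds(points, carrier_hz):
    """Rough RMS jitter from phase noise points [(offset_hz, dBc_per_hz), ...].

    Points must be sorted by offset frequency. The integration limits are the
    first and last offsets, which correspond to the measurement time and
    measurement bandwidth mentioned above.
    """
    area = 0.0
    for (f1, l1), (f2, l2) in zip(points, points[1:]):
        p1 = 10 ** (l1 / 10)  # convert dBc/Hz to a linear power ratio per Hz
        p2 = 10 ** (l2 / 10)
        area += 0.5 * (p1 + p2) * (f2 - f1)  # trapezoid rule
    phase_rms_rad = math.sqrt(2 * area)      # factor 2 covers both sidebands
    return phase_rms_rad / (2 * math.pi * carrier_hz)

# Hypothetical phase noise profile for a 10 MHz oscillator (illustrative only).
hypothetical_profile = [(10.0, -120.0), (100.0, -140.0), (1e3, -150.0), (1e5, -155.0)]
jitter = rms_jitter_seconds(hypothetical_profile, 10e6)
print(f"estimated RMS jitter: {jitter * 1e12:.2f} ps")
```

Changing the first or last offset in the list changes the result, which is the time-domain statement that jitter depends on measurement time and bandwidth.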

I would lock the experiment’s oscillators together, and measure phase noise vs. frequency. Not many oscillator problems escape the scrutiny of a phase noise plot. Even though the experiment is in the time domain, phase noise is a more insightful and sensitive diagnostic tool, especially at low frequency offsets, which correspond to long measurement times.

Oh, ok, 75.3 msec is the average of t-in-DAQ for all the BB events, not the sum. Thanks, Eric. That is still bunched at the early end of the 600 ms DAQ cycle, but I guess the beam generator on the CERN side is also triggered based on the GPS clock time, correlating it to the DAQ cycle.

Autiero says “[they] now have an indication along that line [of explaining the result]” Very conservative. Seems more like he should have said “Mystery solved; we will rerun in May with better accuracy.”

http://www.nature.com/news/embattled-neutrino-project-leaders-step-down-1.10371

Matt, your blog, Nature News (largely Eugenie Samuel Reich) and Wikipedia are the only sources to have covered the OPERA saga comprehensively, unbiasedly, and amazingly accurately given public releases from Gran Sasso were often confusing and at times downright misleading. This is not a slur on the media (something Autiero seems to insist on even now); more a testament to the exceptional quality of three different ways of reporting (crowdsourcing guided by an effective expert, paid journalist, and wiki-style).

Thanks (both for your kind words and your help). I wonder who is behind the Wikipedia updates. Reich has done a very good job, I agree. Plus she reads this website, which I appreciate very much.

Wikipedia? Mostly nonexperts, I suspect, including me. The creator of the page and at least one consistent contributor was from Europe (seems like this got even more press there). The Wikipedia model requires reliable secondary sourcing, that is a news-outlet report, not a primary source, that is a physics paper only experts can discuss. That made it hard keeping out Elburg/Contaldi, and phrasing the “refutations” right–but comments from experts like you, Sergio Bertolucci (CERN research director), and Autiero (his objection to ICARUS was valid) helped balance the article. But OPERA did obfuscate; for example, Ereditato once said “They all signed” when asked about who all signed the v2 arxiv paper (reported by Dennis Overbye in NYTimes). That was false (even v2 had both new dropouts and old holdouts).

Largely the wiki was updated by those who wanted the result to be true, but were long-sighted enough not to delude themselves.

After all, one day we will play with much, much higher energies; energies which will dwarf today’s the way light-speed dwarfs a car’s; energies at which today’s physics will be an approximation just as at near-light-speed Newtonian physics, precise and accurate in its day, is an approximation. After all, one day we will break the barrier.

Figure 4’s characterization of a “correct” rise time signal does not make sense to me, based on years of test experience at MIT, in the 60’s through the 80’s. There appears to be some distortion, or ringing in the signal, in my opinion.

“However, the statement from Eriditato suggests that the OPERA/LVD collaboration only occurred quite recently . . .”

Well, OPERA and LVD collaborated way back when to do inter-timing calibration (the exact same thing done this time around). This is mentioned on page 183 of “Astroparticle, Particle, Space Physics, Radiation Interaction, Detectors and Medical Physics Applications – Vol. 5” edited by Claude Leroy, Pier-Giorgio Rancoita, Michele Barone, Andrea Gaddi, Larry Price, and Randal Ruchti. It is actually the report of a conference, the 11th conference in 2010 (but the inter calibration was done back in 2008 and all analysis done by 2009 – the footnotes talk of an “upcoming” paper in 2009):

http://preview.tinyurl.com/7sjwm5p

That time around they used the 2008 CNGS and atmospheric muon data (for CNGS data they compare the mean neutrino arrival time within a spill), but clearly the software etc would have been the exact same for 2009/10 atmospheric muon data. The Ereditato statement is true only if narrowly interpreted as “the OPERA/LVD collaboration for inter-calibrating with 2009/10 atmospheric muon data occurred only recently . . .” That begs the question why, especially since a well-established procedure to do so was already in place.

New Scientist has some quotes from Autiero as well. I think of Autiero as the person more directly involved; after all Ereditato was the PR person–and blaming a physicist for a PR failure is probably not that serious an issue.

http://www.newscientist.com/article/dn21656-leaders-of-controversial-neutrino-experiment-step-down.html?DCMP=OTC-rss&nsref=online-news

Who exactly is Luca Stanco? He has been quoted extensively from day one, mostly opposing Autiero, but with no hints as to his actual role. As usual, I guess one has to dig deep to interpret the right way what anybody from OPERA says.

I don’t know any of the answers to your questions, unfortunately. None of these people is known to me personally, so I can’t read between lines. My reading of Ereditato is murky; I don’t know what he means, really, about LVD and OPERA’s collaboration. But I got the impression from what he said that the LVD/OPERA collaboration was not intended for this current purpose and some negotiation was necessary to make the current phase of the collaboration happen. That might well be a mis-reading…

Ereditato, as spokesman, is much more than just P.R. In particle physics experiments, a “spokesperson”, such as Fabiola Gianotti at ATLAS or Joe Incandela at CMS, is a leading scientist who is elected to this post but who during his or her term makes important decisions that affect and determine the direction of the entire collaboration. The buck does stop with him.

But my understanding (informal and not certain) is that Autiero, the physics coordinator for the OPERA experiment (which is below “spokesperson”), led the neutrino speed measurement effort from day one, starting several years ago. I’m quite confident that it is he who must have personally certified the timing measurements that proved faulty, and who decided on the priorities as to which things to check first and which checks to postpone until later.

And it is almost certainly the two of them who were substantially responsible for the decision to announce their “timing anomaly” result at a big and very public talk at CERN. Surely either of them could have blocked that idea. The talk at CERN was a huge mistake, which neither of them is willing to admit — in their statements to the press, they just blame everyone else, and noticeably take no responsibility for what is widely viewed as a gigantic blunder. That is why, in my view, for reasons having nothing to do with the scientific mistakes that OPERA made, their resignations from the leadership positions at OPERA are welcome.

Fascinating post, thanks.

Lesson 1: They did verify calibration. They spend a whole lot of time on it.

Lesson 2: I think the GPS-based synchronization approach has actually been validated with the ICARUS results. But, of course, the public interest wasn’t from that technical advance made by OPERA.

None of the suggestions would have worked in this specific case. Keeping the fiber bend radius large and taping it down, for example, wouldn’t have kept the fiber from being unscrewed. I don’t think the connector got unscrewed by just chance pressure and torque; it very much looks like somebody forgot to screw it back in after it was unscrewed. There is the question of why the system was messed with after the calibration was done. My presumption is somebody pulled the PCI card out, but this is something they should be able to verify (even if it happened 4 years ago).

I didn’t see anything that looked like calibration followed by verification in the description of the experiment. It looks to me like an unknown delay that is aligned with a known delay. For something to qualify as a calibration, it needs to be traceable to known standards, and it is valid only for as long as the equipment is expected to remain stable. Changing cables or swapping cards means that an old calibration is no longer valid, unless each swapped piece is verified and has its additional uncertainty added to the error budget.

The lack of cable management in the photos suggests a reason to remove the fiber. Perhaps when cleaning up the cables, the fiber was disconnected because that was the easiest way to untangle things. If it was taped down, it would be less likely to be messed with. The photo shows the keyboard and mouse cables near the fiber! You have to expect users to pull on the keyboard cable, and the fiber is not nearly rugged enough to survive in this cabling environment. Perhaps someone broke the old fiber and improperly replaced it with a new one.

Changing the PCI card would require recalibrating and reverifying, especially with the slow photodetector that was used. The scope waveform shows a 1 volt rise in 50ns when it is working properly! This makes the whole setup much less accurate than it needs to be, because a relatively small amplitude error becomes a large time error.

Even unscrewing and reconnecting the fiber can cause a big change in the signal, for example 10% or so. It needs to be cleaned every time, no exceptions. The connector repeatability is still not that good, and combined with the slow photodetector amplifier, I would expect larger errors than the error bars shown in the graphs.
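To put rough numbers on the point about slow edges, here is a minimal sketch of how an amplitude change turns into a timing shift. It is only an illustration under stated assumptions, not a model of OPERA's actual electronics: it assumes a perfectly linear rising edge that takes 50 ns to reach its full amplitude (1 V nominal, as in the scope waveform), a rise time that stays fixed when the amplitude changes, and a fixed-threshold trigger. The 0.5 V threshold and the function names are hypothetical, chosen only for the example.

```python
def crossing_time_ns(amplitude_v, threshold_v, rise_time_ns=50.0):
    """Time (ns) at which a linear rising edge crosses a fixed threshold.

    Assumes the edge rises linearly from 0 V to amplitude_v in rise_time_ns.
    """
    if threshold_v > amplitude_v:
        raise ValueError("the edge never reaches the threshold")
    return rise_time_ns * threshold_v / amplitude_v


def timing_shift_ns(nominal_v, actual_v, threshold_v, rise_time_ns=50.0):
    """Shift in threshold-crossing time when the amplitude changes."""
    return (crossing_time_ns(actual_v, threshold_v, rise_time_ns)
            - crossing_time_ns(nominal_v, threshold_v, rise_time_ns))


# A 10% amplitude drop (1.0 V -> 0.9 V) with a hypothetical 0.5 V threshold.
shift = timing_shift_ns(nominal_v=1.0, actual_v=0.9, threshold_v=0.5)
print(f"timing shift: {shift:.2f} ns")
```

Under these assumptions a 10% drop in amplitude delays the threshold crossing by roughly 2.8 ns. With a faster photodetector (a smaller `rise_time_ns`) the same amplitude change would produce a proportionally smaller shift.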

The photos are quite revealing about other aspects of the experimental setup and measurement technique: Corroded BNC connectors, messy wiring, converting slow analog signals to digital inside an old office-grade PC (the PS2 mouse and keyboard connectors are a giveaway – possibly the whole PC was swapped!). I like to build things out of junk, too, but I don’t make extraordinary claims based on it.

This blog really shows the inside of the sausage factory! I had always imagined that particle physicists were neat freaks, more like photonic system engineers. Maybe there was a lab tour between December 6 and 14, and someone had to clean up?

The only thing I can assure you is that theoretical particle physicists, as a group, are generally not neat-freaks. I’m pretty bad, but not the worst.

Experimental particle physicists probably vary quite a lot.

I am grateful that bloggers and commentators were not continuously informed as I learned these lessons the hard way!

(This is reposted from last night by request)

Lesson 1: Calibration is not enough. The calibration needs to be verified with an independent, different type of measurement. This magnitude of error could have been easily detected with a primary frequency standard. Check out leapsecond.com for interesting and amusing application examples of this type of standard.

Lesson 2: Beware of complex, unverified schemes to use something convenient (a GPS clock) in an inconvenient way (far from the antenna.) Comparing the experimental graphs to the accuracy available from a primary frequency standard, I wonder about the whole synchronization approach.

Lesson 3: Measure digital signals with an analog measurement, especially if they are clocks. It is too easy to plug in a digital signal and assume that if it seems to work at all, it is pristine. A quick measurement of the analog signal reveals the garbage when you have this type of problem. If you have to move the connector after the analog measurement, there is still potential for error. Use an optical splitter or switch to allow the measurement without moving the connector.

Lesson 4: Single mode fiber and FC connectors are much less rugged and reliable than electronic connections. Plan for them to fail a lot. Keep bend radius large on the fiber, and tape it down. Thoroughly clean the FC connector after every use. Cut the connectors off from even slightly damaged cables so that they don’t get re-used (I started doing that late one night. After that I got home on time much more often!)

I must say that it is very hard to believe that the OPERA team, who are clearly smart people, although human, did not check whether the cable was properly screwed in before they published their result. This is clearly spooky. I mean, if I want to measure something and get an unusual result, I will of course check the cables etc. before I believe the result. I just can’t believe that the OPERA team did not check the cables before they went public. Sorry in advance if I am the only empty head in here 🙂

I do find it hard to believe, but let me give you an example of how this kind of thing can happen. Note: PURE SPECULATION FOLLOWS!

Professor to Postdoctoral Researcher: I want you to take the two graduate students over there and walk the entire cabling chain: check every cable visually and make sure it looks perfect: plugged in properly, not bent, not frayed, everything looking absolutely pristine, and keep a careful record.

Postdoctoral Researcher to Graduate Students: Ok, we have to go check that all the cables look ok.

Grad Student going through these cables notices this one isn’t in all the way, but knows this is far enough in that if it were a cable carrying an ordinary wire it would work fine, so not understanding the subtleties of fiber optic cables, doesn’t think it’s a problem.

OR

Grad Student says to Postdoc: Hey, this cable isn’t in all the way.

Postdoc to Student: Oh, that’s in far enough [not realizing that for a fiber optic cable in a timing chain, that’s NOT enough.]

THEN

Postdoc to Professor: Ok, we checked all the cables.

In other words, I personally find it more likely that it was checked, but by someone who was insufficiently familiar with the fact that a fiber optic cable is so sensitive.

END OF PURE SPECULATION

What is disturbing is that probably nobody double-checked. With such a critical result on the line, you would have thought that the more general check of measuring the delays on all the main lines, especially the timing line from Gran Sasso’s GPS device into OPERA, would have been a high priority. But we know now that they did not repeat the check from 2007. This blind spot is hard to understand.

Note, however, that this is how experiments often fail; somebody missed one detail that everyone else thought had been checked, because they were told it was checked and they trusted that it had been checked carefully. Notice that it would appear (preliminarily) that the area of the clock was the only place where a mistake was made, because OPERA-2 is now giving an expected result. This was a hard measurement, and that they have only these two mistakes in the area of the clock is pretty impressive. What’s not impressive is that the leadership made a big deal out of it and had people spend their time checking high-level issues first before being thorough about the low-level issues.

Thanks a lot Prof Strassler for your excellent answers and blog in general. It is so fun (extremely interesting and because of that also fun) to have the opportunity to get insight into your world and the world of physicists. Wish you a very nice Easter 🙂

I think the delayed double cosmic muon events have something to do with the fact that cosmic muons and neutrinos seemingly get generated in pairs? One cosmic ray generates a bundle of particles including muons and neutrinos. Once in a while, the neutrino would hit a rock and generate another muon. There is a chance the OPERA detector detects both. Of these two muons, if neutrinos are superluminal, the neutrino-muon should arrive before the atmospheric one. See http://arxiv.org/abs/1109.6238. This check actually would have caught the problem since it relies on just local underground timing. Once again, my physics-naive interpretation, but the terminology does seem to fit what is in the paper.

In their May run, OPERA is going to fix the +/-25ns jitter from the 25MHz clock (they seem to be shooting for 1 ns accuracy per event – is that a 1 GHz clock?) In fact they seem to be shooting for a couple of nanoseconds error. I guess they need to show something better than what Icarus has already achieved to justify the trouble.

As to “you have to bend way over backwards to call it a ‘timing anomaly’ again and again” Autiero actually published a paper suggesting we have failed to detect neutrinos from Gamma Ray Bursts (presumably with IceCube) because they are superluminal and would have passed us by awhile ago: D. Autiero, P. Migliozzi and A. Russo (November 14, 2011). “The neutrino velocity anomaly as an explanation of the missing observation of neutrinos in coincidence with GRB”. Journal of Cosmology and Astroparticle Physics 11 (026). arXiv:1109.5378. Bibcode 2011JCAP…11..026A. doi:10.1088/1475-7516/2011/11/026.

But I can’t blame Autiero; on the other side, people were claiming “refutations” of the experiment in a heads-I-win-tails-you-lose way. Nobel laureates, in fact. Van Elburg was garnering a lot of press with an arbitrary calculation that added up to 60 ns. While you rightly shine a spotlight on the OPERA handling of the situation, I do think the “objectors” to the result, while right on the facts (except Elburg and Contaldi), did add to the confusion in their zeal to clear it.

25MHz clock –> 20 MHz clock
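The arithmetic behind this correction can be sketched as follows. This is a back-of-the-envelope illustration, not a description of OPERA's actual timing hardware: it assumes the only error is quantization, meaning that an event arriving at a random moment is stamped with the nearest tick of a free-running clock, so the error is uniformly distributed over one clock period. The function name is hypothetical.

```python
import math


def quantization_error_ns(clock_mhz):
    """Return (period, worst-case error, RMS error) in ns for a clock.

    Assumes event times are uniformly distributed relative to the ticks
    and each event is assigned to the nearest tick.
    """
    period_ns = 1000.0 / clock_mhz         # 1 MHz corresponds to 1000 ns
    worst_case_ns = period_ns / 2.0        # the "+/-" half-period bound
    rms_ns = period_ns / math.sqrt(12.0)   # std. dev. of a uniform distribution
    return period_ns, worst_case_ns, rms_ns


for clock_mhz in (20.0, 25.0, 1000.0):
    period, worst, rms = quantization_error_ns(clock_mhz)
    print(f"{clock_mhz:7.1f} MHz: period {period:6.2f} ns, "
          f"+/-{worst:5.2f} ns worst case, {rms:5.2f} ns RMS")
```

Under these assumptions a +/-25 ns jitter corresponds to a 50 ns period, that is, a 20 MHz clock; a 25 MHz clock would give +/-20 ns. A 1 GHz clock has a 1 ns period, so its worst-case quantization error would be +/-0.5 ns.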

Well, Dr. Autiero is going to suffer the most from this debacle. A lot of blame — maybe most of it — is on his shoulders. He should have been breaking his back to check the timing chain at OPERA rather than writing papers on Gamma Ray Bursts.

I don’t think I would put the Cohen-Glashow paper in the same class as Van Elburg. Cohen-Glashow is correct. If they had replaced the word “refute” with “raise questions about” it would have been better, I agree (and as you probably know, this blog objected consistently to the word “refute” until ICARUS’s most recent measurement.) But still — it’s an excellent paper. Van Elburg was just embarrassing (at least I hope he is properly embarrassed.)

Looking through a bit more, I think that arXiv paper is the “delayed double cosmic muon” issue all right. The second author (Francesco Ronga) is from INFN; so OPERA likely saw the paper. (The first author is Teresa Montaruli – U Wisc. Prof, IceCube collaborator and associate at INFN, though in the paper she has Univ. of Geneva as affiliation). I guess the energy range of the associated neutrinos is probably different though.