Matt Strassler [July 8, 2012]

One remarkable thing about the discovery of the Higgs-like particle is how quickly it occurred, relatively speaking. Based on the data from December 2011, when the first hints appeared at around 125 GeV/c², and on what the Standard Model predicts for the simplest type of Higgs particle with that mass, it was not obvious that by July 2012 the evidence would become strong and almost uncontroversial. There were several reasons for this, which I'll outline in a moment. But a combination of a lot of skill and a little luck made for an unambiguous success. In this article I'll give you a sense of what this was all about.

What were the challenges that the experiments faced between December and July?

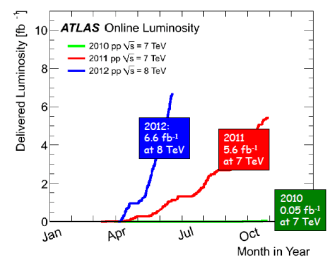

First, they needed data to come in at a rate that was fast enough that three months of 2012 would provide at least as much data as all of 2011. This required that the Large Hadron Collider [LHC] operate with a higher number of collisions per second than ever before, and do so with high reliability. The LHC was set to operate at 8 TeV of energy per proton-proton collision for 2012, instead of last year’s 7 TeV. While this was not a huge jump, there was always a risk that the change would cause the LHC to have some trouble early in the year, and that some time would be lost, meaning that the data that arrived by late June would be insufficient.

Things started off well; a high collision rate was achieved in April. However, after a short break for some adjustments, the LHC started having difficulties and became somewhat unreliable. Weeks went by, and it began to look as though the 2012 data would not reach 2011 levels by late June. But the accelerator physicists and engineers worked very hard and solved the problems; the right adjustments were made and the reliability increased dramatically. From then until the middle of June the data poured in, fast enough that the total amount of data collected in 2012 through June not only matched all of 2011 but exceeded it by 20%! For making possible this final deluge of critical data, the operators of the LHC deserve a huge round of applause!

Second, the experiments had to deal with the challenges of a much higher collision rate, which is achieved by having multiple proton-proton collisions occur almost simultaneously, all overlapping within the detector. This causes a problem called “pile-up”, which I have described here. Suffice it to say that increasing pile-up makes the environment for making measurements increasingly difficult. For various technical reasons, the LHC is operating with more simultaneous collisions, and therefore more pile-up, than the ATLAS and CMS detectors were designed to handle, and so there were great concerns that some degradation in the quality of their measurements would occur, partly nullifying the benefits of the increased data rate.
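The basic pile-up arithmetic can be sketched as follows. Assuming the standard relation that collisions per second equal the luminosity times the inelastic proton-proton cross section, and that protons collide only when bunches cross, the average number of overlapping collisions per crossing is μ = L·σ_inel / (n_bunches·f_rev), and the number in any one crossing is Poisson-distributed around μ. The helper names and the input values (roughly 2012-like: a luminosity of 7×10³³ cm⁻²s⁻¹, about 1380 bunches, a revolution frequency of 11245 Hz, σ_inel around 73 mb) are illustrative assumptions, not numbers taken from this article.

```python
import math

# 1 millibarn = 1e-27 cm^2
CM2_PER_MILLIBARN = 1e-27


def mean_pileup(lumi_cm2_s: float, sigma_inel_mb: float,
                n_bunches: int, f_rev_hz: float) -> float:
    """Hypothetical helper: average number of pp collisions per bunch crossing."""
    collisions_per_second = lumi_cm2_s * sigma_inel_mb * CM2_PER_MILLIBARN
    crossings_per_second = n_bunches * f_rev_hz
    return collisions_per_second / crossings_per_second


def prob_at_least(k: int, mu: float) -> float:
    """Poisson probability that a single crossing contains at least k collisions."""
    below = sum(math.exp(-mu) * mu**j / math.factorial(j) for j in range(k))
    return 1.0 - below


# Assumed, approximate 2012-like inputs (illustration only).
mu_2012 = mean_pileup(7e33, 73.0, 1380, 11245.0)
print(f"mean pile-up ~ {mu_2012:.0f} collisions per crossing")
print(f"P(>= 20 collisions in a crossing) ~ {prob_at_least(20, mu_2012):.3f}")
```

With the number of bunches held fixed, doubling the luminosity doubles μ; that is the sense in which a higher collision rate means more pile-up.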

But the experimenters set to work… and as far as I can tell, wherever there was a significant risk that pile-up would degrade data quality in the Higgs search, they came up with new methods that largely eliminated the problem. Their ability to take data in 2012, despite the higher collision rates, matched what they could do in 2011. For this remarkable achievement, the ATLAS and CMS experimentalists deserve a round of applause too!

Third, the experimenters did not simply sit on their hands while the data was coming in during the first months of 2012. They had been hard at work finding ways to make their measurements more accurate and to make sure they captured a large fraction of the Higgs particles produced. Where possible they applied these methods retrospectively to the 2011 data, and then combined the reanalyzed 2011 data with the 2012 data. In many cases they obtained improvements of 10 to 40%. Another round of applause, please!

A fourth challenge was that, with the new data coming in at 8 TeV, last year's computer simulations of 7 TeV collisions could not be used and had to be redone; and with such high data rates, a huge increase in computing efficiency was needed. Both experiments made major improvements here; without them, the results could not have been completed except by compromising the quality of the measurements.

So: 2012 brought 20% more collisions than all of 2011, thanks to the LHC engineers; the rate of Higgs production per collision was roughly 30% higher at 8 TeV than at 2011's 7 TeV; the experiments kept data taking efficient and high-quality despite the higher collision rates; and improved measurement techniques bought 10% here and 30% there. Together, these made the measurements we saw in July a good step better than the mere doubling of the 2011 data that had been the rough expectation.
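The first two factors in this accounting can be multiplied out directly. The sketch below takes the round numbers above (20% more collisions, roughly 30% more Higgs particles per collision) and, as a simplifying assumption, supposes that backgrounds grow by the same factor as the signal, so that the expected significance scales like the square root of the number of Higgs particles. It deliberately leaves out the 10 to 40% analysis improvements, which would come on top; the variable and function names are hypothetical.

```python
import math

# Round numbers from the text, all relative to the full 2011 dataset.
LUMI_2011 = 1.0            # all 2011 collisions, taken as the unit
LUMI_2012 = 1.2            # 2012 through June: 20% more collisions than all of 2011
HIGGS_PER_LUMI_7TEV = 1.0  # Higgs production per collision at 7 TeV (the unit)
HIGGS_PER_LUMI_8TEV = 1.3  # roughly 30% more Higgs particles per collision at 8 TeV


def higgs_yield(lumi: float, rate: float) -> float:
    """Relative number of Higgs particles: amount of collisions times rate per collision."""
    return lumi * rate


yield_2011 = higgs_yield(LUMI_2011, HIGGS_PER_LUMI_7TEV)
yield_2012 = higgs_yield(LUMI_2012, HIGGS_PER_LUMI_8TEV)
total = yield_2011 + yield_2012

naive_total = 2.0  # the rough expectation: a mere doubling of the 2011 data

# Assumption: signal and background grow by the same factor, so the
# expected significance scales like the square root of the yield.
sig_gain = math.sqrt(total)
naive_gain = math.sqrt(naive_total)

print(f"Higgs yield vs 2011 alone: {total:.2f}x (naive doubling: {naive_total:.1f}x)")
print(f"Significance vs 2011 alone: {sig_gain:.2f}x (naive: {naive_gain:.2f}x)")
```

With these inputs the combined dataset holds about 2.56 times as many Higgs particles as 2011 alone, rather than 2 times, before any analysis improvements are counted.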

And then there was a bit of luck. For some reason that nobody yet understands, more Higgs particles showed up than expected in precisely the places where ATLAS and CMS could most easily find them. Maybe it was just chance; maybe the (very difficult!) calculation of what to expect from the simplest type of Higgs came out a little smaller than it should have; maybe the Higgs is not of the simplest type (a Standard Model Higgs) and has properties that make it a little easier to discover; or maybe all of these at once.

Of the five Higgs decay modes that can be searched for at present, the two easiest are its decays (1) to two photons and (2) to two lepton/anti-lepton pairs (shorthand: “four leptons”). These are relatively easy because each appears as a clear, narrow bump in a plot, rather than as a broad enhancement over what one expects to see in the absence of a Higgs particle. (See this link for a detailed discussion of the issues; though out of date in its details, its main points still hold and should still be instructive.) Each experiment made a measurement in each of these two channels, giving four measurements in all. Three of them (the ATLAS two-photon measurement, the ATLAS four-lepton measurement, and the CMS two-photon measurement) produced a larger peak than one would have expected from a Standard Model Higgs particle, by an overall factor of less than 2: not big enough to be a statistically significant excess over the Standard Model, but big enough to be worth keeping an eye on. The CMS four-lepton measurement came out just about as expected. More precisely:

- ATLAS photons: an overall factor of 1.9 ± 0.5 higher than the Standard Model for a Higgs of 126.5 GeV (the excess is slightly smaller for a Higgs of 125.5 GeV)

- ATLAS leptons: 10.4 ± 1.0 events expected for a Higgs of 125 GeV (5.1 ± 0.8 if there is no Higgs); 13 observed

- CMS photons: an overall factor of 1.56 ± 0.43 higher than the Standard Model for a Higgs of 125 GeV

- CMS leptons: 6.3 events expected [my estimate, read off the plot] for a Higgs of 125.5 GeV (1.6 if there is no Higgs [again my estimate]); 5 observed
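As a rough cross-check of the numbers in this list, one can estimate how far each result sits from the Standard Model. The sketch below assumes a naive definition of the signal strength, (observed − expected without Higgs) / (expected with Higgs − expected without Higgs), and treats the quoted photon uncertainties as symmetric Gaussian errors; it ignores systematic effects and is not how ATLAS or CMS actually fit their data. The helper names are hypothetical, and the CMS four-lepton inputs are themselves estimates read off a plot.

```python
def naive_signal_strength(observed: float, expected_with_higgs: float,
                          expected_no_higgs: float) -> float:
    """Observed excess over background divided by the expected Higgs signal.

    1.0 means exactly Standard Model-like; 0.0 means no Higgs signal at all.
    Ignores all uncertainties (illustration only).
    """
    expected_signal = expected_with_higgs - expected_no_higgs
    return (observed - expected_no_higgs) / expected_signal


def deviation_in_sigma(ratio_to_sm: float, uncertainty: float) -> float:
    """How many (symmetric, Gaussian) standard deviations a ratio lies above 1."""
    return (ratio_to_sm - 1.0) / uncertainty


# Four-lepton event counts from the list.
atlas_4l = naive_signal_strength(observed=13, expected_with_higgs=10.4, expected_no_higgs=5.1)
cms_4l = naive_signal_strength(observed=5, expected_with_higgs=6.3, expected_no_higgs=1.6)

# Two-photon ratios to the Standard Model, with their quoted uncertainties.
atlas_2g = deviation_in_sigma(1.9, 0.5)
cms_2g = deviation_in_sigma(1.56, 0.43)

print(f"ATLAS four leptons: signal strength ~ {atlas_4l:.1f}")
print(f"CMS four leptons:   signal strength ~ {cms_4l:.1f}")
print(f"ATLAS two photons:  {atlas_2g:.1f} sigma above Standard Model")
print(f"CMS two photons:    {cms_2g:.1f} sigma above Standard Model")
```

With these inputs the ATLAS four-lepton signal comes out near 1.5 times the expectation and the CMS one near 0.7 (with only a handful of events, the statistical uncertainty on that number is large), while the two-photon results sit roughly 1.8 and 1.3 standard deviations above the Standard Model, consistent with none of the excesses being statistically significant on its own.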

The reason that ATLAS and CMS expected different numbers of four-lepton events is that they used different techniques to select which collisions to use for their final results. (Of course, each experiment decided which technique to use before looking at the data, using simulations! You should never choose your technique based on the data itself, since doing so will bias the result.)

These excesses helped the results from both ATLAS and CMS look a bit more convincing. Statistical analyses are always necessary to tell you whether a result is less significant than it looks informally; but conversely, informal inspection is always necessary to tell you whether a result might be less reliable than the statistical arguments suggest. The results from CMS and ATLAS could have been just as statistically significant and yet looked a lot less convincing. As it happens, they pass both the statistical tests and various cross-checks, including checks by eye. For this we have to thank hard work by many, many people, and a little luck from nature. Otherwise we might have had to wait until September, or even longer, through a long summer of back-and-forth debate full of confused and confusing media stories. Most of us (especially those of us who communicate with the public and the media) are grateful to have a more straightforward situation!

8 Responses

Will the peak in the two-photon channel get taller and taller with more data? Although the result is convincing, it is a rather small peak over a bumpy background at this stage.

It won't get larger relative to the background; the signal and background will grow at the same rate. But the bumps in the background will become (relatively) smaller, so eventually the peak will be easier to distinguish from what will be a smoother background.
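The point in this reply can be made quantitative with a standard counting argument. Assuming Poisson statistics, a background of B events fluctuates by about √B events, a fraction 1/√B of itself, while the ratio of signal S to background stays fixed as data accumulates; the rough significance S/√B therefore grows like the square root of the amount of data. The per-unit counts and the function name below are invented for illustration, not taken from ATLAS or CMS.

```python
import math

# Hypothetical counts per unit of data in a narrow window around the peak
# (illustrative only; not ATLAS or CMS numbers).
SIGNAL_PER_UNIT = 100.0
BACKGROUND_PER_UNIT = 1000.0


def peak_summary(data_units: float) -> dict:
    """Signal/background ratio, relative background wiggle, and rough significance."""
    s = SIGNAL_PER_UNIT * data_units
    b = BACKGROUND_PER_UNIT * data_units
    return {
        # Peak height relative to the background: unchanged by more data.
        "signal_over_background": s / b,
        # Typical Poisson fluctuation sqrt(b) as a fraction of b: shrinks as 1/sqrt(data).
        "relative_wiggle": math.sqrt(b) / b,
        # Rough significance s / sqrt(b): grows as sqrt(data).
        "significance": s / math.sqrt(b),
    }


for units in (1, 4, 16):
    r = peak_summary(units)
    print(f"{units:>2}x data: S/B = {r['signal_over_background']:.2f}, "
          f"background wiggle = {r['relative_wiggle']:.1%}, "
          f"significance ~ {r['significance']:.1f}")
```

Quadrupling the data leaves the peak's height relative to the background unchanged but halves the relative size of the background wiggles, which doubles the rough significance.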

This is not the end of the search for the complete validation of the Standard Model. More revelations ahead, I’m sure.